Setting Up Elasticsearch

Tags: elasticsearch, operations

I. Environment

| Hostname | IP address | Operating system | ES version |
|---|---|---|---|
| es1 | 192.168.10.180 | CentOS 7 | Elasticsearch 7.8.0 |
| es2 | 192.168.10.181 | CentOS 7 | Elasticsearch 7.8.0 |

II. Single-Node Deployment

1. Extract the archive, create the elasticsearch user, and set ownership

[root@es1 ~]# ls

elasticsearch-7.8.0-linux-x86_64.tar.gz

[root@es1 ~]# tar xf elasticsearch-7.8.0-linux-x86_64.tar.gz

[root@es1 ~]# useradd es && passwd es

Changing password for user es.

New password:

BAD PASSWORD: The password is shorter than 8 characters

Retype new password:

passwd: all authentication tokens updated successfully.

[root@es1 ~]# mv elasticsearch-7.8.0 elasticsearch

[root@es1 ~]# chown -R es:es elasticsearch

[root@es1 ~]#

2. Edit the configuration file

[root@es1 ~]# mv elasticsearch /data/

[root@es1 ~]# cd /data/

[root@es1 data]# ls

elasticsearch

[root@es1 data]# cd /data/elasticsearch/config/

[root@es1 config]# vim elasticsearch.yml

[root@es1 config]# cat elasticsearch.yml

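node.name: node-1 ## node name

path.data: /data/elasticsearch/data ## data directory

path.logs: /data/elasticsearch/logs ## log directory

bootstrap.memory_lock: true ## lock the process memory so ES is not swapped out

indices.requests.cache.size: 5% ## request cache size

indices.queries.cache.size: 10% ## query cache size

network.host: 192.168.10.180 ## this host's IP address

http.port: 9200 ## default HTTP port

cluster.initial_master_nodes: ["node-1"] ## names (or IP addresses) of the master-eligible nodes used to bootstrap the cluster

http.cors.enabled: true ## enable CORS

http.cors.allow-origin: "*" ## allow CORS requests from any origin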

3. Start

Error 1: no JDK environment

[root@es1 config]# su es

[es@es1 config]$ cd ../bin/

[es@es1 bin]$ ./elasticsearch -d

could not find java in JAVA_HOME at /usr/local/java/bin/java

Fix:

Option 1: add a JDK check to the bin/elasticsearch-env script (change JAVA_HOME to ES_JAVA_HOME)

[es@es1 bin]$ grep "JAVA_HOME" elasticsearch-env

ES_JAVA_HOME="/data/elasticsearch/jdk/"

if [ ! -z "$ES_JAVA_HOME" ]; then

JAVA="$ES_JAVA_HOME/bin/java"

JAVA_TYPE="ES_JAVA_HOME"

Option 2: point the environment variables at the JDK bundled with ES

[root@es2 ~]# grep "JAVA_HOME" /etc/profile

export JAVA_HOME=/data/elasticsearch/jdk/

export PATH=$JAVA_HOME/bin:$PATH

Error 2: bootstrap checks fail because the operating system has not been tuned

[es@es1 bin]$ ./elasticsearch -d

[es@es1 bin]$ ERROR: [3] bootstrap checks failed

[1]: max file descriptors [4096] for elasticsearch process is too low, increase to at least [65535]

[2]: memory locking requested for elasticsearch process but memory is not locked

[3]: max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]

ERROR: Elasticsearch did not exit normally - check the logs at /data/elasticsearch/logs/elasticsearch.log

Fix: tune the system (only the resulting settings are shown; search online for the details of each parameter)

[root@es1 config]# grep -v "#" /etc/security/limits.conf

* hard memlock unlimited

* soft memlock unlimited

* hard nofile 1024000

* soft nofile 1024000

* hard nproc 1024000

* soft nproc 1024000

[root@es1 config]# grep -v "#" /etc/systemd/system.conf

[Manager]

DefaultLimitNOFILE=65536

DefaultLimitNPROC=32000

DefaultLimitMEMLOCK=infinity

[root@es1 config]# grep -v "#" /etc/sysctl.conf

net.ipv4.tcp_timestamps = 0

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_tw_reuse = 1

net.core.somaxconn = 65535

net.ipv4.tcp_tw_recycle = 1

net.ipv4.tcp_fin_timeout = 5

fs.inotify.max_user_watches = 1048576

fs.inotify.max_user_instances = 256

net.ipv4.tcp_keepalive_time = 30

net.ipv4.ip_local_port_range = 1024 65535

net.ipv4.tcp_max_syn_backlog = 262144

net.ipv4.tcp_max_tw_buckets = 256000

vm.max_map_count = 262144

net.core.netdev_max_backlog = 262144

net.ipv4.tcp_max_orphans = 262144

net.ipv4.tcp_synack_retries = 1

net.ipv4.tcp_syn_retries = 1

## Load the settings (after changing ulimit values, log out and open a new terminal session)

[root@es1 config]# /bin/systemctl daemon-reload

[root@es1 config]# /sbin/sysctl -p
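Of the settings above, three map directly to the three failed bootstrap checks: the open-file limit, the memory-lock limit, and vm.max_map_count. A minimal pre-flight sketch, assuming it is sourced in a fresh session of the es user; the helper names `meets_minimum` and `check_bootstrap_limits` are illustrative and not part of Elasticsearch:

```shell
#!/usr/bin/env bash
# Pre-flight check for the three bootstrap checks shown in the error above.
# meets_minimum and check_bootstrap_limits are hypothetical helper names.

# Succeeds if $1 is "unlimited" or a number greater than or equal to $2.
meets_minimum() {
  actual=$1
  required=$2
  [ "$actual" = "unlimited" ] && return 0
  [ "$actual" -ge "$required" ] 2>/dev/null
}

# Prints one line per failed prerequisite and returns non-zero if any failed.
check_bootstrap_limits() {
  status=0
  # Thresholds are the values quoted in the bootstrap error messages.
  meets_minimum "$(ulimit -n)" 65535 \
    || { echo "nofile too low: $(ulimit -n)"; status=1; }
  # bootstrap.memory_lock: true requires an unlimited memlock limit.
  [ "$(ulimit -l)" = "unlimited" ] \
    || { echo "memlock is not unlimited: $(ulimit -l)"; status=1; }
  meets_minimum "$(cat /proc/sys/vm/max_map_count)" 262144 \
    || { echo "vm.max_map_count too low"; status=1; }
  return $status
}
```

Running `check_bootstrap_limits` as the es user before `./elasticsearch -d` should print nothing when all three prerequisites are met.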

Successful start

[root@es1 ~]# su es

[es@es1 root]$ cd /data/elasticsearch/bin/

[es@es1 bin]$ ./elasticsearch -d

[es@es1 bin]$ ps -ef | grep elas

es 9544 1 99 16:27 pts/0 00:00:24 /data/elasticsearch/jdk//bin/java -Xshare:auto -Des.networkaddress.cache.ttl=60 -Des.networkaddress.cache.negative.ttl=10 -XX:+AlwaysPreTouch -Xss1m -Djava.awt.headless=true -Dfile.encoding=UTF-8 -Djna.nosys=true -XX:-OmitStackTraceInFastThrow -XX:+ShowCodeDetailsInExceptionMessages -Dio.netty.noUnsafe=true -Dio.netty.noKeySetOptimization=true -Dio.netty.recycler.maxCapacityPerThread=0 -Dio.netty.allocator.numDirectArenas=0 -Dlog4j.shutdownHookEnabled=false -Dlog4j2.disable.jmx=true -Djava.locale.providers=SPI,COMPAT -Xms1g -Xmx1g -XX:+UseG1GC -XX:G1ReservePercent=25 -XX:InitiatingHeapOccupancyPercent=30 -Djava.io.tmpdir=/tmp/elasticsearch-12986234859914472880 -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=data -XX:ErrorFile=logs/hs_err_pid%p.log -Xlog:gc*,gc+age=trace,safepoint:file=logs/gc.log:utctime,pid,tags:filecount=32,filesize=64m -XX:MaxDirectMemorySize=536870912 -Des.path.home=/data/elasticsearch -Des.path.conf=/data/elasticsearch/config -Des.distribution.flavor=default -Des.distribution.type=tar -Des.bundled_jdk=true -cp /data/elasticsearch/lib/* org.elasticsearch.bootstrap.Elasticsearch -d

es 9655 9544 0 16:27 pts/0 00:00:00 /data/elasticsearch/modules/x-pack-ml/platform/linux-x86_64/bin/controller

es 10179 9050 0 16:27 pts/0 00:00:00 grep --color=auto elas

[es@es1 bin]$

III. Cluster Deployment

1. Install ES (same as above, with the cluster settings added to the configuration file)

[root@es1 ~]# cat /data/elasticsearch/config/elasticsearch.yml

cluster.name: es-cluster ## cluster name; it must be identical on every node, and nodes with the same cluster name join the same cluster

node.name: node-1

path.data: /data/elasticsearch/data

path.logs: /data/elasticsearch/logs

bootstrap.memory_lock: true

indices.requests.cache.size: 5%

indices.queries.cache.size: 10%

network.host: 192.168.10.180

http.port: 9200

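transport.tcp.port: 9300 ## TCP port for node-to-node communication, default 9300

discovery.zen.ping.unicast.hosts: ["192.168.10.180:9300", "192.168.10.181:9300"] ## seed hosts for node discovery (legacy setting name; 7.x also accepts discovery.seed_hosts)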

cluster.initial_master_nodes: ["node-1","node-2"]

http.cors.enabled: true

http.cors.allow-origin: "*"

[root@es2 config]# cat /data/elasticsearch/config/elasticsearch.yml

cluster.name: es-cluster

node.name: node-2

path.data: /data/elasticsearch/data

path.logs: /data/elasticsearch/logs

network.host: 192.168.10.181

http.port: 9200

transport.tcp.port: 9300

discovery.zen.ping.unicast.hosts: ["192.168.10.180:9300", "192.168.10.181:9300"]

cluster.initial_master_nodes: ["node-1", "node-2"]

bootstrap.memory_lock: true

indices.requests.cache.size: 5%

indices.queries.cache.size: 10%

http.cors.enabled: true

http.cors.allow-origin: "*"

[root@es2 config]#

2. Start and verify

[es@es1 bin]$ ps -ef | grep elas

es 16253 1 10 16:50 pts/0 00:00:42 /data/elasticsearch/jdk//bin/java -Xshare:auto -Des.networkaddress.cache.ttl=60 -Des.networkaddress.cache.negative.ttl=10 -XX:+AlwaysPreTouch -Xss1m -Djava.awt.headless=true -Dfile.encoding=UTF-8 -Djna.nosys=true -XX:-OmitStackTraceInFastThrow -XX:+ShowCodeDetailsInExceptionMessages -Dio.netty.noUnsafe=true -Dio.netty.noKeySetOptimization=true -Dio.netty.recycler.maxCapacityPerThread=0 -Dio.netty.allocator.numDirectArenas=0 -Dlog4j.shutdownHookEnabled=false -Dlog4j2.disable.jmx=true -Djava.locale.providers=SPI,COMPAT -Xms1g -Xmx1g -XX:+UseG1GC -XX:G1ReservePercent=25 -XX:InitiatingHeapOccupancyPercent=30 -Djava.io.tmpdir=/tmp/elasticsearch-8972888888703564096 -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=data -XX:ErrorFile=logs/hs_err_pid%p.log -Xlog:gc*,gc+age=trace,safepoint:file=logs/gc.log:utctime,pid,tags:filecount=32,filesize=64m -XX:MaxDirectMemorySize=536870912 -Des.path.home=/data/elasticsearch -Des.path.conf=/data/elasticsearch/config -Des.distribution.flavor=default -Des.distribution.type=tar -Des.bundled_jdk=true -cp /data/elasticsearch/lib/* org.elasticsearch.bootstrap.Elasticsearch -d

es 16353 16253 0 16:50 pts/0 00:00:00 /data/elasticsearch/modules/x-pack-ml/platform/linux-x86_64/bin/controller

es 31076 12154 0 16:56 pts/0 00:00:00 grep --color=auto elas

[es@es1 bin]$ kill -9 16253

[es@es1 bin]$ ./elasticsearch -d

[es@es1 bin]$ curl http://192.168.10.180:9200/_cat/nodes?v

ip heap.percent ram.percent cpu load_1m load_5m load_15m node.role master name

192.168.10.180 36 98 7 0.31 0.15 0.14 dilmrt * node-1

[es@es1 bin]$ ^C

[es@es1 bin]$ curl http://192.168.10.180:9200/_cat/nodes?v

ip heap.percent ram.percent cpu load_1m load_5m load_15m node.role master name

192.168.10.180 38 98 3 0.19 0.15 0.14 dilmrt * node-1

192.168.10.181 36 78 4 0.29 0.10 0.07 dilmrt - node-2
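The check above is done by eye: the second call shows two rows and a `*` in the master column. A small sketch of the same check in script form, assuming the default `_cat/nodes?v` column layout shown above (master in column 9, name in column 10); `count_nodes` and `elected_master` are hypothetical helper names:

```shell
#!/usr/bin/env bash
# Both helpers read the text output of `_cat/nodes?v` on standard input.

# Prints the number of node rows (every non-empty line after the header).
count_nodes() {
  awk 'NR > 1 && NF > 0 { n++ } END { print n + 0 }'
}

# Prints the name of the node marked "*" in the master column.
# Assumes the default column order: master is field 9, name is field 10.
elected_master() {
  awk 'NR > 1 && $9 == "*" { print $10 }'
}
```

For example, `curl -s 'http://192.168.10.180:9200/_cat/nodes?v' | count_nodes` should print 2 once node-2 has joined.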

3. Enable user authentication

3.1 Generate the certificate

[es@es1 bin]$ ./elasticsearch-certutil cert -out config/elastic-certificates.p12 -pass ""

This tool assists you in the generation of X.509 certificates and certificate

signing requests for use with SSL/TLS in the Elastic stack.

The 'cert' mode generates X.509 certificate and private keys.

* By default, this generates a single certificate and key for use

on a single instance.

* The '-multiple' option will prompt you to enter details for multiple

instances and will generate a certificate and key for each one

* The '-in' option allows for the certificate generation to be automated by describing

the details of each instance in a YAML file

* An instance is any piece of the Elastic Stack that requires an SSL certificate.

Depending on your configuration, Elasticsearch, Logstash, Kibana, and Beats

may all require a certificate and private key.

* The minimum required value for each instance is a name. This can simply be the

hostname, which will be used as the Common Name of the certificate. A full

distinguished name may also be used.

* A filename value may be required for each instance. This is necessary when the

name would result in an invalid file or directory name. The name provided here

is used as the directory name (within the zip) and the prefix for the key and

certificate files. The filename is required if you are prompted and the name

is not displayed in the prompt.

* IP addresses and DNS names are optional. Multiple values can be specified as a

comma separated string. If no IP addresses or DNS names are provided, you may

disable hostname verification in your SSL configuration.

* All certificates generated by this tool will be signed by a certificate authority (CA).

* The tool can automatically generate a new CA for you, or you can provide your own with the

-ca or -ca-cert command line options.

By default the 'cert' mode produces a single PKCS#12 output file which holds:

* The instance certificate

* The private key for the instance certificate

* The CA certificate

If you specify any of the following options:

* -pem (PEM formatted output)

* -keep-ca-key (retain generated CA key)

* -multiple (generate multiple certificates)

* -in (generate certificates from an input file)

then the output will be be a zip file containing individual certificate/key files

Certificates written to /data/elasticsearch/config/elastic-certificates.p12

This file should be properly secured as it contains the private key for

your instance.

This file is a self contained file and can be copied and used 'as is'

For each Elastic product that you wish to configure, you should copy

this '.p12' file to the relevant configuration directory

and then follow the SSL configuration instructions in the product guide.

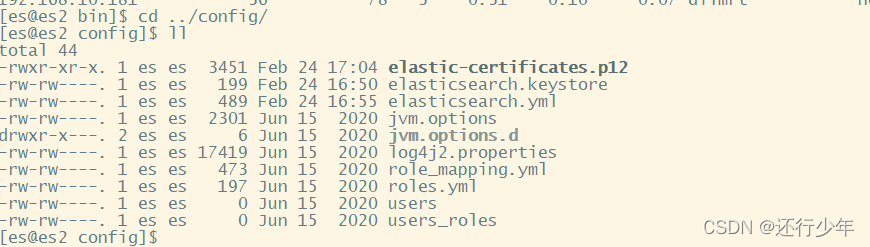

3.2 Set permissions and copy the file to the same directory on the other cluster nodes

[es@es1 bin]$ cd ../config/

[es@es1 config]$ chmod 755 elastic-certificates.p12

[es@es1 config]$ scp elastic-certificates.p12 192.168.10.181:/data/elasticsearch/config/

3.3 Enable X-Pack security in the configuration file

[es@es1 config]$ cat /data/elasticsearch/config/elasticsearch.yml

cluster.name: es-cluster

node.name: node-1

path.data: /data/elasticsearch/data

path.logs: /data/elasticsearch/logs

bootstrap.memory_lock: true

indices.requests.cache.size: 5%

indices.queries.cache.size: 10%

network.host: 192.168.10.180

http.port: 9200

transport.tcp.port: 9300

discovery.zen.ping.unicast.hosts: ["192.168.10.180:9300","192.168.10.181:9300"]

cluster.initial_master_nodes: ["node-1","node-2"]

http.cors.enabled: true

http.cors.allow-origin: "*"

xpack.security.enabled: true

xpack.security.transport.ssl.enabled: true

xpack.security.transport.ssl.verification_mode: certificate

xpack.security.transport.ssl.keystore.path: elastic-certificates.p12

xpack.security.transport.ssl.truststore.path: elastic-certificates.p12

3.4 Restart ES and set the passwords

[es@es1 config]$ ps -ef | grep elas

es 4477 12154 0 17:13 pts/0 00:00:00 grep --color=auto elas

es 31755 1 4 16:57 pts/0 00:00:49 /data/elasticsearch/jdk//bin/java -Xshare:auto -Des.networkaddress.cache.ttl=60 -Des.networkaddress.cache.negative.ttl=10 -XX:+AlwaysPreTouch -Xss1m -Djava.awt.headless=true -Dfile.encoding=UTF-8 -Djna.nosys=true -XX:-OmitStackTraceInFastThrow -XX:+ShowCodeDetailsInExceptionMessages -Dio.netty.noUnsafe=true -Dio.netty.noKeySetOptimization=true -Dio.netty.recycler.maxCapacityPerThread=0 -Dio.netty.allocator.numDirectArenas=0 -Dlog4j.shutdownHookEnabled=false -Dlog4j2.disable.jmx=true -Djava.locale.providers=SPI,COMPAT -Xms1g -Xmx1g -XX:+UseG1GC -XX:G1ReservePercent=25 -XX:InitiatingHeapOccupancyPercent=30 -Djava.io.tmpdir=/tmp/elasticsearch-12887036096412054470 -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=data -XX:ErrorFile=logs/hs_err_pid%p.log -Xlog:gc*,gc+age=trace,safepoint:file=logs/gc.log:utctime,pid,tags:filecount=32,filesize=64m -XX:MaxDirectMemorySize=536870912 -Des.path.home=/data/elasticsearch -Des.path.conf=/data/elasticsearch/config -Des.distribution.flavor=default -Des.distribution.type=tar -Des.bundled_jdk=true -cp /data/elasticsearch/lib/* org.elasticsearch.bootstrap.Elasticsearch -d

es 31854 31755 0 16:57 pts/0 00:00:00 /data/elasticsearch/modules/x-pack-ml/platform/linux-x86_64/bin/controller

[es@es1 config]$ kill -9 31755

[es@es1 config]$ cd ../bin/

[es@es1 bin]$ ./elasticsearch -d

Create the passwords (at least 6 characters)

[es@es1 bin]$ ./elasticsearch-setup-passwords interactive

Initiating the setup of passwords for reserved users elastic,apm_system,kibana,kibana_system,logstash_system,beats_system,remote_monitoring_user.

You will be prompted to enter passwords as the process progresses.

Please confirm that you would like to continue [y/N]y

Enter password for [elastic]:

Reenter password for [elastic]:

Enter password for [apm_system]:

Reenter password for [apm_system]:

Enter password for [kibana_system]:

Reenter password for [kibana_system]:

Enter password for [logstash_system]:

Reenter password for [logstash_system]:

Enter password for [beats_system]:

Reenter password for [beats_system]:

Enter password for [remote_monitoring_user]:

Reenter password for [remote_monitoring_user]:

Changed password for user [apm_system]

Changed password for user [kibana_system]

Changed password for user [kibana]

Changed password for user [logstash_system]

Changed password for user [beats_system]

Changed password for user [remote_monitoring_user]

Changed password for user [elastic]

Test

[es@es1 bin]$ curl -u "elastic:123456" http://192.168.10.180:9200/_cat/nodes?v

ip heap.percent ram.percent cpu load_1m load_5m load_15m node.role master name

192.168.10.181 13 79 5 0.82 0.88 0.65 dilmrt - node-2

192.168.10.180 14 98 5 0.10 0.14 0.12 dilmrt * node-1

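3.5 Change a password

3.5.1 When the current password is known

-u supplies the current credentials (user:password)

-d carries the new password in the request body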

[es@es1 bin]$ curl -u "elastic:123456" http://192.168.10.180:9200/_cat/nodes?v

ip heap.percent ram.percent cpu load_1m load_5m load_15m node.role master name

192.168.10.181 13 79 5 0.82 0.88 0.65 dilmrt - node-2

192.168.10.180 14 98 5 0.10 0.14 0.12 dilmrt * node-1

[es@es1 bin]$ curl -H "Content-Type:application/json" -XPOST -u elastic:123456 'http://192.168.10.180:9200/_xpack/security/user/elastic/_password' -d '{ "password" : "1234567" }'

{

}

[es@es1 bin]$ curl -u "elastic:123456" http://192.168.10.180:9200/_cat/nodes?v

{"error":{"root_cause":[{"type":"security_exception","reason":"unable to authenticate user [elastic] for REST request [/_cat/nodes?v]","header":{"WWW-Authenticate":"Basic realm=\"security\" charset=\"UTF-8\""}}],"type":"security_exception","reason":"unable to authenticate user [elastic] for REST request [/_cat/nodes?v]","header":{"WWW-Authenticate":"Basic realm=\"security\" charset=\"UTF-8\""}},"status":401}

[es@es1 bin]$ curl -u "elastic:1234567" http://192.168.10.180:9200/_cat/nodes?v

ip heap.percent ram.percent cpu load_1m load_5m load_15m node.role master name

192.168.10.181 33 79 3 0.06 0.48 0.57 dilmrt - node-2

192.168.10.180 34 98 3 0.45 0.19 0.15 dilmrt * node-1
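In 7.x the `_xpack/security` path used above still works but is a deprecated alias; the current path for the same API is `_security`. A minimal sketch that builds the request pieces for that endpoint; `password_url` and `password_body` are hypothetical helper names, and the body builder assumes the password contains no double quotes or backslashes:

```shell
#!/usr/bin/env bash
# String builders only; the request itself is still sent with curl.

# $1 = host:port, $2 = user whose password is being changed
password_url() {
  printf 'http://%s/_security/user/%s/_password\n' "$1" "$2"
}

# $1 = new password (assumed to contain no double quotes or backslashes)
password_body() {
  printf '{"password":"%s"}\n' "$1"
}
```

A call could then look like `curl -u elastic -H 'Content-Type: application/json' -XPOST "$(password_url 192.168.10.180:9200 elastic)" -d "$(password_body 'new-secret')"`. Passing only the user name to `-u` makes curl prompt for the current password, which keeps it out of the shell history.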

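3.5.2 When the password has been forgotten

Create a superuser in the local file realm, then use it to reset the elastic password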

[es@es1 bin]$ ./elasticsearch-users useradd myname -p mypassword -r superuser

[es@es1 bin]$ curl -u myname:mypassword -XPUT 'http://192.168.10.180:9200/_xpack/security/user/elastic/_password?pretty' -H 'Content-Type: application/json' -d '{"password" : "12345678"}'

{

}

[es@es1 bin]$ curl -u "elastic:1234567" http://192.168.10.180:9200/_cat/nodes?v

{"error":{"root_cause":[{"type":"security_exception","reason":"unable to authenticate user [elastic] for REST request [/_cat/nodes?v]","header":{"WWW-Authenticate":"Basic realm=\"security\" charset=\"UTF-8\""}}],"type":"security_exception","reason":"unable to authenticate user [elastic] for REST request [/_cat/nodes?v]","header":{"WWW-Authenticate":"Basic realm=\"security\" charset=\"UTF-8\""}},"status":401}

[es@es1 bin]$ curl -u "elastic:12345678" http://192.168.10.180:9200/_cat/nodes?v

ip heap.percent ram.percent cpu load_1m load_5m load_15m node.role master name

192.168.10.181 50 79 3 0.00 0.18 0.41 dilmrt - node-2

192.168.10.180 50 98 3 0.95 0.65 0.36 dilmrt * node-1

[es@es1 bin]$

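IV. Upgrading ES

1 Back up the old version (stop ES first; the old directory is kept as /data/elasticsearch7.8.0, which the copy commands in step 3 rely on)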

[root@localhost data]# ps -ef | grep elas

es 26795 1 4 15:21 ? 00:02:00 /usr/local/java/bin/java -Xshare:auto -Des.networkaddress.cache.ttl=60 -Des.networkaddress.cache.negative.ttl=10 -XX:+AlwaysPreTouch -Xss1m -Djava.awt.headless=true -Dfile.encoding=UTF-8 -Djna.nosys=true -XX:-OmitStackTraceInFastThrow -Dio.netty.noUnsafe=true -Dio.netty.noKeySetOptimization=true -Dio.netty.recycler.maxCapacityPerThread=0 -Dio.netty.allocator.numDirectArenas=0 -Dlog4j.shutdownHookEnabled=false -Dlog4j2.disable.jmx=true -Djava.locale.providers=SPI,JRE -Xms3g -Xmx3g -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=75 -XX:+UseCMSInitiatingOccupancyOnly -Djava.io.tmpdir=/tmp/elasticsearch-3796414330494870773 -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=data -XX:ErrorFile=logs/hs_err_pid%p.log -XX:+PrintGCDetails -XX:+PrintGCDateStamps -XX:+PrintTenuringDistribution -XX:+PrintGCApplicationStoppedTime -Xloggc:logs/gc.log -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=32 -XX:GCLogFileSize=64m -XX:MaxDirectMemorySize=1610612736 -Des.path.home=/data/elasticsearch -Des.path.conf=/data/elasticsearch/config -Des.distribution.flavor=default -Des.distribution.type=tar -Des.bundled_jdk=true -cp /data/elasticsearch/lib/* org.elasticsearch.bootstrap.Elasticsearch -d

es 26823 26795 0 15:21 ? 00:00:00 /data/elasticsearch/modules/x-pack-ml/platform/linux-x86_64/bin/controller

root 29695 29495 0 16:05 pts/1 00:00:00 grep --color=auto elas

[root@localhost data]# pkill -9 java

[root@localhost data]# ps -ef | grep elas

root 29752 29495 0 16:06 pts/1 00:00:00 grep --color=auto elas

2 Extract the new version and put it in place of the old one

[root@localhost ~]# tar xf elasticsearch-7.16.2-linux-x86_64.tar.gz

[root@localhost ~]# mv elasticsearch-7.16.2 /data/elasticsearch

3 Configure the new version (copy the configuration, data, and certificate from the old one)

[root@localhost data]# cat /data/elasticsearch7.8.0/config/elasticsearch.yml > /data/elasticsearch/config/elasticsearch.yml

[root@localhost data]# cp -a /data/elasticsearch7.8.0/data /data/elasticsearch/

[root@localhost data]# cp -a /data/elasticsearch7.8.0/es_backup /data/elasticsearch/

[root@localhost config]# cp -a /data/elasticsearch7.8.0/config/elastic-certificates.p12 /data/elasticsearch/config/

4 Change the owner of the new version

[root@localhost data]# chown -R es:es /data/elasticsearch

5 Start

[root@localhost config]# su es

[es@localhost config]$ cd /data/elasticsearch/bin/

[es@localhost bin]$ ./elasticsearch -d

6 Test

[es@localhost bin]$ curl -u elastic:123456 http://192.168.10.180:9200

{

"name" : "node-1",

"cluster_name" : "elasticsearch",

"cluster_uuid" : "JFPQN70-RLaRCUIGYS5VuQ",

"version" : {

"number" : "7.16.2",

"build_flavor" : "default",

"build_type" : "tar",

"build_hash" : "2b937c44140b6559905130a8650c64dbd0879cfb",

"build_date" : "2021-12-18T19:42:46.604893745Z",

"build_snapshot" : false,

"lucene_version" : "8.10.1",

"minimum_wire_compatibility_version" : "6.8.0",

"minimum_index_compatibility_version" : "6.0.0-beta1"

},

"tagline" : "You Know, for Search"

}

[es@localhost bin]$ curl -u elastic:123456 http://192.168.10.180:9200/_cat/indices?v

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size

green open .geoip_databases 1D0788VhT6u_Vg9G-22T5g 1 0 44 0 41.5mb 41.5mb

green open .security-7 StBKKdhJQ6ySV-2lH6yJsg 1 0 7 0 23.9kb 23.9kb
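The version check in the test above can also be scripted. A minimal sketch that pulls the `number` field out of the root endpoint's pretty-printed JSON with sed, assuming the layout shown above; `es_version` is a hypothetical helper name, and a JSON tool such as jq would be the sturdier choice where it is installed:

```shell
#!/usr/bin/env bash
# Reads the JSON returned by the root endpoint on standard input and
# prints the value of the first "number" field (the ES version).
es_version() {
  sed -n 's/.*"number"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' | head -n 1
}
```

For example, `curl -s -u elastic http://192.168.10.180:9200 | es_version` should print 7.16.2 after the upgrade described here.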

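V. ES Backup and Restore

1. Snapshot-based backup

1.1 Create the snapshot repository and a snapshot (all nodes)

1.1.1 Create a directory to serve as the snapshot repository and give ownership to the es user

## This directory should live on shared storage (NFS, GlusterFS)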

[root@localhost data]# mkdir -p /data/elasticsearch/es_backup

[root@localhost data]# chown -R es:es /data/elasticsearch/es_backup

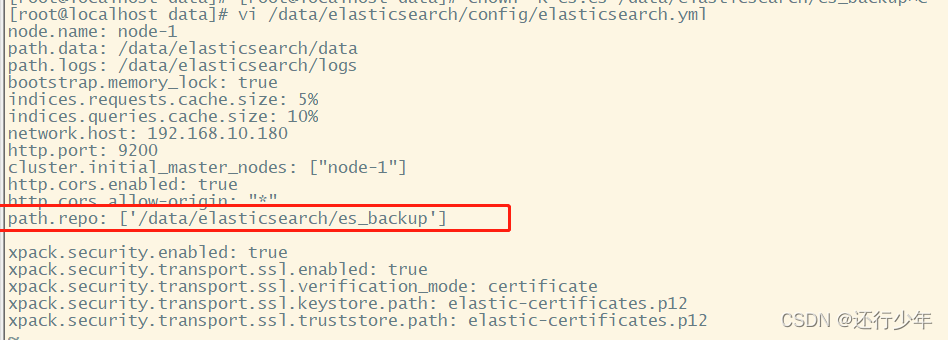

1.1.2 Edit the configuration file on every ES node to add the repository path, then restart ES
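The setting that whitelists a filesystem repository location is `path.repo` in elasticsearch.yml. A minimal sketch that adds it only when it is missing, assuming the file ends with a newline; `ensure_path_repo` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Appends a path.repo line to elasticsearch.yml unless one already exists.
# $1 = path to elasticsearch.yml, $2 = repository directory
ensure_path_repo() {
  conf=$1
  repo=$2
  # Leave the file untouched if path.repo is already configured.
  if grep -q '^path\.repo:' "$conf"; then
    return 0
  fi
  printf 'path.repo: ["%s"]\n' "$repo" >> "$conf"
}
```

On each node this would be called as `ensure_path_repo /data/elasticsearch/config/elasticsearch.yml /data/elasticsearch/es_backup`, followed by a restart of ES.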

1.1.3 Register the snapshot repository

[root@localhost data]# curl -XPUT -u elastic:123456 'http://192.168.10.180:9200/_snapshot/my_backup' -H "Content-Type: application/json" -d '{

"type": "fs",

"settings": {

"location": "/data/elasticsearch/es_backup",

"compress": true

}

}'

{"acknowledged":true}

curl -XGET -u elastic:'123456' 'http://192.168.10.180:9200/_snapshot/_all?pretty' # show all repositories

curl -XGET -u elastic:'123456' 'http://192.168.10.180:9200/_cat/repositories?v' # list repositories in tabular form

curl -XGET -u elastic:'123456' 'http://192.168.10.180:9200/_snapshot/my_backup' # show a specific repository

curl -XDELETE -u elastic:'123456' 'http://192.168.10.180:9200/_snapshot/my_backup?pretty' # delete the repository

1.1.4 Create an index

[root@localhost ~]# curl -XPUT -u elastic:123456 '192.168.10.180:9200/index-demo/test/1?pretty' -H 'content-Type:application/json' -d '{"user":"zhangsan","mesg":"hello world"}'

{

"_index" : "index-demo",

"_type" : "test",

"_id" : "1",

"_version" : 1,

"result" : "created",

"_shards" : {

"total" : 2,

"successful" : 1,

"failed" : 0

},

"_seq_no" : 0,

"_primary_term" : 1

}

[root@localhost ~]# curl -u elastic:'123456' "192.168.10.180:9200/_cat/indices?v"

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size

green open .geoip_databases 1D0788VhT6u_Vg9G-22T5g 1 0 44 0 41.5mb 41.5mb

green open .security-7 StBKKdhJQ6ySV-2lH6yJsg 1 0 7 0 23.9kb 23.9kb

yellow open index-demo l9gCn03zQ0qR7kbU3XEA9Q 1 1 1 0 4.5kb 4.5kb

1.1.5 Create a snapshot

[root@localhost ~]# curl -X PUT -u elastic:'123456' "192.168.10.180:9200/_snapshot/my_backup/es_backup-20220330?wait_for_completion=true&pretty"

{

"snapshot" : {

"snapshot" : "es_backup-20220330",

"uuid" : "Tv1hwsGRQpS5duLRYap39w",

"repository" : "my_backup",

"version_id" : 7160299,

"version" : "7.16.2",

"indices" : [

".ds-.logs-deprecation.elasticsearch-default-2022.03.30-000001",

".ds-ilm-history-5-2022.03.30-000001",

".security-7",

"index-demo",

".geoip_databases"

],

"data_streams" : [

"ilm-history-5",

".logs-deprecation.elasticsearch-default"

],

"include_global_state" : true,

"state" : "SUCCESS",

"start_time" : "2022-03-30T02:51:29.910Z",

"start_time_in_millis" : 1648608689910,

"end_time" : "2022-03-30T02:51:30.111Z",

"end_time_in_millis" : 1648608690111,

"duration_in_millis" : 201,

"failures" : [ ],

"shards" : {

"total" : 5,

"failed" : 0,

"successful" : 5

},

"feature_states" : [

{

"feature_name" : "geoip",

"indices" : [

".geoip_databases"

]

},

{

"feature_name" : "security",

"indices" : [

".security-7"

]

}

]

}

}

## Monitor any snapshot that is currently running

curl -XGET -u elastic:'123456' "http://192.168.10.180:9200/_snapshot/my_backup/_current?pretty"

## Get a per-shard breakdown of every snapshot that is currently running

curl -XGET -u elastic:'123456' 'http://192.168.10.180:9200/_snapshot/_status'

## List all snapshots in the repository

curl -XGET -u elastic:'123456' 'http://192.168.10.180:9200/_snapshot/my_backup/_all?pretty'

## List the snapshots in a specific repository; the response also shows the indices each snapshot contains

curl -X GET -u elastic:'123456' "192.168.10.180:9200/_snapshot/my_backup/*?verbose=false&pretty"

## Delete a specific snapshot

curl -X DELETE -u elastic:'123456' "192.168.10.180:9200/_snapshot/my_backup/es_backup-20220330"
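The snapshot above is named by date (es_backup-20220330), which suits a daily job. A minimal sketch for generating the name and the request URL; `snapshot_name` and `snapshot_url` are hypothetical helper names, and the date defaults to today when none is passed:

```shell
#!/usr/bin/env bash
# String builders for a dated snapshot; the request is still sent with curl.

# $1 = name prefix, $2 = optional date as YYYYMMDD (defaults to today)
snapshot_name() {
  prefix=$1
  day=${2:-$(date +%Y%m%d)}
  printf '%s-%s\n' "$prefix" "$day"
}

# $1 = host:port, $2 = repository name, $3 = snapshot name
snapshot_url() {
  printf 'http://%s/_snapshot/%s/%s?wait_for_completion=true&pretty\n' "$1" "$2" "$3"
}
```

A cron job could then run `curl -X PUT -u elastic:... "$(snapshot_url 192.168.10.180:9200 my_backup "$(snapshot_name es_backup)")"`, with the credentials supplied however the site prefers.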

1.1.6 Delete the index

[root@localhost ~]# curl -X DELETE -u elastic:123456 "192.168.10.180:9200/index-demo"

{"acknowledged":true}

[root@localhost ~]# curl -u elastic:'123456' "192.168.10.180:9200/_cat/indices?v"

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size

green open .geoip_databases 1D0788VhT6u_Vg9G-22T5g 1 0 44 0 41.5mb 41.5mb

green open .security-7 StBKKdhJQ6ySV-2lH6yJsg 1 0 7 0 23.9kb 23.9kb

1.1.7 Restore a single index

[root@localhost ~]# curl -X GET -u elastic:'123456' "192.168.10.180:9200/_snapshot/my_backup/*?verbose=false&pretty"

{

"snapshots" : [

{

"snapshot" : "es_backup-20220329",

"uuid" : "vfgjBu5tScCQzdNlP0swaA",

"repository" : "my_backup",

"indices" : [

".geoip_databases",

".security-7"

],

"data_streams" : [ ],

"state" : "SUCCESS"

},

{

"snapshot" : "es_backup-20220330",

"uuid" : "Tv1hwsGRQpS5duLRYap39w",

"repository" : "my_backup",

"indices" : [

".ds-.logs-deprecation.elasticsearch-default-2022.03.30-000001",

".ds-ilm-history-5-2022.03.30-000001",

".geoip_databases",

".security-7",

"index-demo"

],

"data_streams" : [ ],

"state" : "SUCCESS"

}

],

"total" : 2,

"remaining" : 0

}

[root@localhost ~]# curl -X POST -u elastic:'123456' "192.168.10.180:9200/_snapshot/my_backup/es_backup-20220330/_restore?pretty" -H 'Content-Type: application/json' -d' { "indices": "index-demo" }'

{

"accepted" : true

}

[root@localhost ~]# curl -u elastic:'123456' "192.168.10.180:9200/_cat/indices?v"

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size

green open .geoip_databases 1D0788VhT6u_Vg9G-22T5g 1 0 44 0 41.5mb 41.5mb

green open .security-7 StBKKdhJQ6ySV-2lH6yJsg 1 0 7 0 23.9kb 23.9kb

yellow open index-demo j9Y6KTE8QiSuUCMdW6pn1g 1 1 1 0 4.5kb 4.5kb
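The restore above works because index-demo was deleted first; restoring over an open index of the same name is rejected. The restore API also accepts `rename_pattern` and `rename_replacement`, so an index can be restored under a different name instead. A minimal sketch of such a request body; `restore_body` is a hypothetical helper name, and it assumes the index name contains no regular-expression metacharacters, since `rename_pattern` is treated as a regex:

```shell
#!/usr/bin/env bash
# Builds a restore request body that restores one index under a new name.
# $1 = index name in the snapshot, $2 = name to restore it as
restore_body() {
  printf '{"indices":"%s","rename_pattern":"%s","rename_replacement":"%s"}\n' "$1" "$1" "$2"
}
```

The output is passed to the same `_restore` call shown above with `-d "$(restore_body index-demo index-demo-restored)"`; the target name here is only an example.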

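1.1.8 Restore the index on another machine

The other machine needs the same snapshot repository registered, with a copy of the repository files placed under its repository directory.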

[root@mysql2 indices]# curl -XPUT -u elastic:123456 'http://192.168.10.181:9200/_snapshot/my_backup' -H "Content-Type: application/json" -d '{

"type": "fs",

"settings": {

"location": "/data/elasticsearch/es_backup",

"compress": true

}

}'

{"acknowledged":true}

[root@mysql2 indices]# curl -X GET -u elastic:'123456' "192.168.10.181:9200/_snapshot/my_backup/*?verbose=false&pretty"

{

"snapshots" : [

{

"snapshot" : "es_backup-20220329",

"uuid" : "vfgjBu5tScCQzdNlP0swaA",

"repository" : "my_backup",

"indices" : [

".geoip_databases",

".security-7"

],

"data_streams" : [ ],

"state" : "SUCCESS"

},

{

"snapshot" : "es_backup-20220330",

"uuid" : "Tv1hwsGRQpS5duLRYap39w",

"repository" : "my_backup",

"indices" : [

".ds-.logs-deprecation.elasticsearch-default-2022.03.30-000001",

".ds-ilm-history-5-2022.03.30-000001",

".geoip_databases",

".security-7",

"index-demo"

],

"data_streams" : [ ],

"state" : "SUCCESS"

}

],

"total" : 2,

"remaining" : 0

}

[root@mysql2 ~]# curl -u elastic:'123456' "192.168.10.181:9200/_cat/indices?v"

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size

green open .geoip_databases jrIHBN1KTfqDAgKKeFZP8w 1 0 40 0 38mb 38mb

green open .security-7 Ahj0JDaARPq-OslsHxhohw 1 0 7 0 25.7kb 25.7kb

[root@mysql2 ~]# curl -X POST -u elastic:'123456' "192.168.10.181:9200/_snapshot/my_backup/es_backup-20220330/_restore?pretty" -H 'Content-Type: application/json' -d' { "indices": "index-demo" }'

{

"accepted" : true

}

[root@mysql2 ~]# curl -u elastic:'123456' "192.168.10.181:9200/_cat/indices?v"

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size

green open .geoip_databases jrIHBN1KTfqDAgKKeFZP8w 1 0 40 0 38mb 38mb

green open .security-7 Ahj0JDaARPq-OslsHxhohw 1 0 7 0 25.7kb 25.7kb

yellow open index-demo 5-bajJbvSYyjODxAxFntQA 1 1 1 0 4.5kb 4.5kb

[root@mysql2 ~]#

2. esdump-based backup
