LLMs:《Instruction Tuning for Large Language Models: A Survey—大型语言模型的指令调优的综述》翻译与解读-程序员宅基地

技术标签: NLP/LLMs 语言模型 人工智能 AI/AGI 自然语言处理

LLMs:《Instruction Tuning for Large Language Models: A Survey—大型语言模型的指令调优的综述》翻译与解读

导读:2023年8月21日,浙江大学等团队,发布了《Instruction Tuning for Large Language Models: A Survey》。指令微调是在大规模语言模型的基础上,使用包含(指令,输出)的监督数据进行进一步训练,以减小模型原有的预测目标与用户指令之间的差距。其目的是增强模型的能力和可控性。

>> 指令微调的方法,包括构建指令数据集、进行指令微调等。构建指令数据集可基于现有数据集转换,也可以使用语言模型自动生成。指令微调则是在指令数据集上进行监督训练。

>> 指令数据集的类型,包括自然指令、非自然指令、跨语言指令、对话指令等多种类型。

>> 应用指令微调的语言模型,如InstructGPT、Alpaca、Vicuna等在大型预训练语言模型基础上进行指令微调的模型。

>> 指令微调的效果评估、分析和批评,需要关注指令数据集的质量、指令学习是否只停留在表面模仿等问题。

>> 提高指令微调效率的方法,如基于适配器、重参数化等方法来进行高效微调。

LLMs指令微调技术通过构建丰富的指令数据集和采用有监督学习的方式,能有效提升开源LLMs的能力和可控性。主要技术点包括构建多种指令数据集方式自然指令、非自然指令以及多模态指令等,采用指令微调的方法对LLMs进行微调,例如基于GPT、T5、LLaMA等骨干模型,采用LOMO、DELTA微调等高效微调技术。指令微调取得很好效果,但是否只是学习表面模式尚存在争议,未来应注重提升指导质量和多方面评估。

目录

LLMs之Data:指令微调的简介、Self Instruction思想(一种生成指令数据集的方法论—主要用在指令微调阶段)的简介、Alpaca/BELLE应用、实战案例代码实现之详细攻略

2023年8月21日—Paper:《Instruction Tuning for Large Language Models: A Survey—大型语言模型的指令调优的综述》翻译与解读

《Instruction Tuning for Large Language Models: A Survey—大型语言模型的指令调优的综述》翻译与解读

指令微调技术(增强LLM的能力和可控性,有监督微调+增量训练)、指令对

LLM显著进展(GPT-3→PaLM→LLaMA)、当前痛点(训练目标与用户目标间的不匹配)、

提出指令微调技术(解决不匹配)、指令微调的3个好处(弥合误差+为人类提供介入模型行为的渠道+性价比高)

指令微调的3大挑战:高质量性、改善严重依赖数据性、可能只学皮毛性

2.1、Instruction Dataset Construction指令数据集构建:

数据实例三元素:instruction【指定任务】、input【补充上下文】、output【预期输出】

2.2、Instruction Tuning指令微调:有监督的训练

3.1、Natural Instructions自然指令:来自193K个实例和61个NLP任务,2元组{输入,输出}

3.2、P3公共提示池:整合170个英语NLP数据集和2052个英语提示,三元组{“输入”【描述任务】+“答案选择”【响应列表】+“目标”【正确响应】}

3.3、xP3跨语言公共提示池:46种语言中16类NLP任务,2元组{输入和目标}

3.4、Flan 2021:将63个NLP基准转换为输入-输出对进而构建,2元组{输入+目标}

3.5、Unnatural Instructions非自然指令:基于InstructGPT构建的24万个实例,4元组{指令+输入+约束+输出}

LLMs之Data:指令微调的简介、Self Instruction思想(一种生成指令数据集的方法论—主要用在指令微调阶段)的简介、Alpaca/BELLE应用、实战案例代码实现之详细攻略

包含基于InstructGPT的52K个训练指令和252个评估指令,3元组{“指令”【定义任务】+“输入”【指令的内容补充】+“输出”【正确结果】}

形成过程:基于52K的初始集→随机选择1个进化策略让ChatGPT重写指令→过滤未进化的指令对(利用ChatGPT和规则)→利用新生成进化指令对更新数据集→重复上述四次→收集了25万个指令对

3.8、LIMA:包含1K数据实例的训练集(75%源自3个社区问答网站)和300个实例的测试集,二元组{instruction, response}

3.9、Super-Natural Instructions超级自然指令:包含1616个NLP任务和500万个任务实例+涵盖76种任务类型和55种语言,二元组(“指令”和“任务实例”)

3.10、Dolly:包含15000个人工生成英语指令+7种特定类型

3.11、OpenAssistant Conversations

包含158K条消息(90K个用户提示+68K个助手回复),35种语言中65K个对话树+450K个人工注释的质量评分,对话树(节点,路径/线程)

五步流程收集对话树:提示者→标记提示→扩展树节点→标记回复→排名

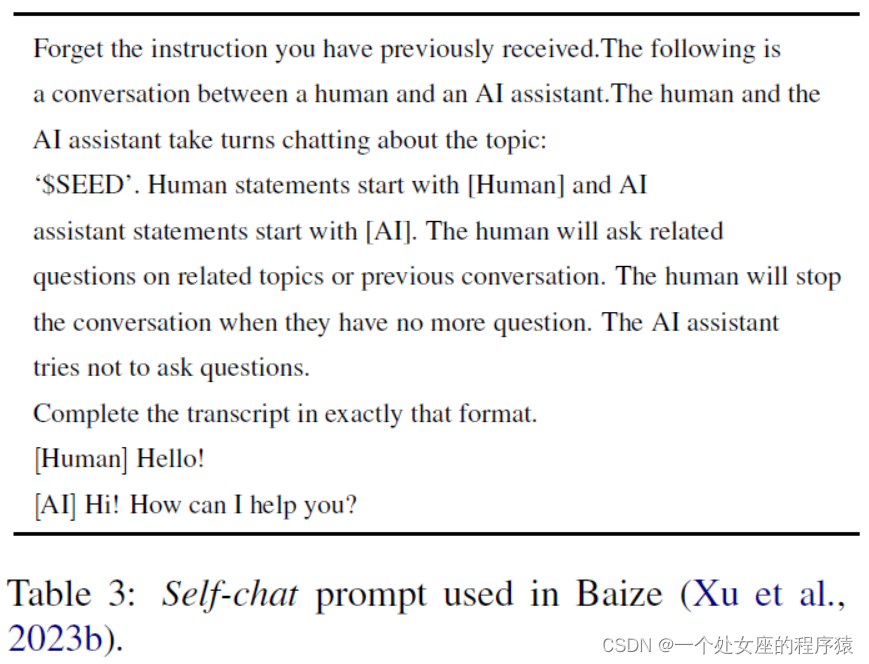

3.12、Baize:基于ChatGPT(self-chat思想)构建的111.5K个实例多轮(3.4轮)聊天语料库,二元组{prompt,response}

4、Instruction Fine-tuned LLMs指导微调的LLM模型

4.1、InstructGPT:基于GPT-3模型+人类指导微调

LLMs之InstructGPT:《Training language models to follow instructions with human feedback》翻译与解读

微调三步骤(基于人类筛选指令进行SFT→基于一个instruction多个降序的responses来训练RM模型→利用RL的PPO策略优化RM模型)

InstructGPT的真实性、毒性、模型性能等表现非常出色

4.2、BLOOMZ:基于BLOOM模型+指令数据集xP3,多种任务及其数据集上表现均超于BLOOM

LLMs:《BLOOM: A 176B-Parameter Open-Access Multilingual Language Model》翻译与解读

4.3、Flan-T5:基于T5模型+FLAN数据集微调,基于JAX的T5X框架+128*TPU v4=37小时

4.4、Alpaca:基于LLaMA模型+利用InstructGPT生成指令数据集进行微调,8*A100-80G设备+混合精度AMP+DP=3小时

LLMs之Alpaca:《Alpaca: A Strong, Replicable Instruction-Following Model》翻译与解读

LLMs之Vicuna:《Vicuna: An Open-Source Chatbot Impressing GPT-4 with 90%* ChatGPT Quality》翻译与解读

AIGC之GPT-4:GPT-4的简介(核心原理/意义/亮点/技术点/缺点/使用建议)、使用方法、案例应用(计算能力/代码能力/看图能力等)之详细攻略

4.8、WizardLM:基于LLaMA模型+Evol-Instruct指令数据集(ChatGPT生成)微调,8*V100 GPU+Deepspeed Zero-3技术+3个epochs =70小时

LLMs之ChatGLM2:ChatGLM2-6B的简介、安装、使用方法之详细攻略

4.10、LIMA:基于LLaMA模型+基于表面对齐假设构建的指令数据集,提出了表面对齐假设并验证了其效果

LLMs:《OPT: Open Pre-trained Transformer Language Models》翻译与解读

Dolly 2:基于Pythia模型+微调databricks-dolly-15k指令数据集

Falcon-Instruct:基于Falcon模型+微调英语对话数据集(Baize数据集150M/1.5亿tokens+RefinedWeb数据集),降内存(Flash Attention+MQ)

Guanaco:基于LLaMA+微调多语言对话数据集(源自包含52K英文指令数据对的Alpaca+534K的多轮对话的多语言)

LLMs之Guanaco:《QLoRA:Efficient Finetuning of Quantized LLMs》翻译与解读

Minotaur:基于Starcoder Plus模型+微调WizardLM和GPTeacher-General-Instruc指令数据集

Nous-Herme:基于LLaMA模型+微调BiologyPhysicsChemistry子集的300K个指令

YuLan-Chat:基于LLaMA模型+微调双语数据集(25万个中英文指令对)

Airoboros:基于LLaMA+微调Self-instruct数据集

5、Multi-modality Instruction Fine-tuning多模态指令微调

5.1、Multi-modality Datasets多模态数据集

MUL-TIINSTRUCT—多模态指令微调数据集—OFA模型:由62个不同的多模态任务组成+统一的序列到序列格式

PMC-VQA—大规模的医学视觉问答数据集—MedVInT模型:227k个图像-问题对和149k个图像,从PMC-OA收集图像-标题对+ChatGPT生成问题-答案对+手工验证

LAMM—2D图像和3D点云理解:包含186K个语言-图像指令-响应对,以及10K个语言-点云指令-响应对

5.2、Multi-modality Instruction Fine-tuning Models多模态指令微调模型

InstructPix2Pix条件扩散模型:基于Stable Diffusion+微调多模态数据集(综合两大模型能力【GPT-3、Stable Diffusion】来生成)

LLaVA:基于CLIP视觉编码器和LLaMA语言解码器模型+微调158K个独特的语言-图像指令-跟随样本的教学视觉语言数据集(利用GPT-4转换格式)

Video-LLaMA多模态框架:由两个分支编码器组成(视觉-语言VL分支和音频-语言AL分支+语言解码器LLaMA)

InstructBLIP视觉-语言指令微调框架:基于BLIP-2模型(图像编码器+LLM+Query Transformer)

Otter:基于OpenFlamingo模型+只微调Perceiver重采样模块、交叉注意力层和输入/输出嵌入

6、Domain-specific Instruction Finetuning特定领域指令微调

6.1、Dialogue对话—InstructDial、LINGUIST模型:每个任务实例{任务描述、实例输入、约束、指令和输出}+两个元任务(指令选择任务+指令二元任务)

CoEdIT辅助写作:基于对FLANT模型+微调在文本编辑的指令数据集,两元组{指令:源,目标}

CoPoet协作的诗歌写作工具:基于T5模型+微调诗歌写作数据集,两元组{指令,诗行}

2023年6月14日,Radiology-GPT针对放射学领域:基于Alpaca+微调放射学领域知识数据集,两元组{发现,结论}

2023年4月18日,ChatDoctor:基于LLaMA模型+微调Alpaca指令数据集和HealthCareMagic100k患者-医生对话数据集且检索外部知识数据库

2023年3月,ChatGLM-Med:基于ChatGLM模型+微调中国医学指令数据集(基于GPT3.5的API和医学知识图谱创建问题-答案对)

6.7、Arithmetic算术:Goat=基于LLaMA模型+微调算术问题数据集(ChatGPT生成数百个指令+自然语言问答的形式表达)

6.8、Code代码:WizardCoder=基于StarCoder模型+Evol-Instruct方法+微调Code Alpaca数据集,3元组{指令、输入、期望输出}

LLMs之Code:SQLCoder的简介、安装、使用方法之详细攻略

2023年,LLMs之Code:Code Llama的简介(衍生模型如Phind-CodeLlama/WizardCoder)、安装、使用方法之详细攻略

LLMs之Law:大语言模型领域行业场景应用之大模型法律行业的简介、主流LLMs(PowerLawGLM/ChatLaw)、经典应用之详细攻略

7、Efficient Tuning Techniques高效微调技术

7.1、基于重参数化—LoRA=基于DeepSpeed框架+训练低维度的A和B→可训练参数比完全微调少得多(LoRA训练GPT-3可降低到千分之一)

7.2、基于添加式—HINT=添加易于微调的模块(基于超网络数生成器生成适配器和前缀参数)+插入到骨干模型作为高效的微调模块

7.3、基于重参数化—QLoRA=LoRA的量化版+NF4+双量化DQ+分页优化器PO

7.4、基于重参数化—LOMO=降低梯度内存需求(融合梯度计算与参数更新+实时只存储单个参数的梯度)+稳定训练(梯度值裁剪+分离梯度范数计算+态损失缩放)+节省内存(激活检查点+ZeRO优化)

7.5、基于规范化—Delta-tuning=优化和最优控制视角+将微调限制在低维流形上来执行子空间优化+微调参数充当最优控制器+在下游任务中引导模型行为

8、Evaluation, Analysis and Criticism评估、分析和批评

8.1、HELM Evaluation:整体评估+提高LM透明度+关注三因素(广泛性+多指标性+标准化)

8.2、Low-resource Instruction Tuning低资源指令微调:STL需要数据量的25%、MTL需要数据量的6%

8.3、Smaller Instruction Dataset更小的指令数据集:LIMA(精选1,000个训练示例)表面可过少数精心策划的指令进行微调

8.4、Evaluating Instruction-tuning Datasets评估指令微调数据集:缺乏开放性和主观性的评估

8.5、Do IT just learn Pattern Copying?IT是否只是学习模式复制?——有论文指出基于IT的显著改进只是捕获表面级别模式而非理解了本质

8.6、Proprietary LLMs Imitation专有LLMs模仿:微调模型能效仿ChatGPT的表达风格,但不等于提升其通用能力→更应注重基模型及指导实例的质量

相关文章

LLMs之Data:指令微调的简介、Self Instruction思想(一种生成指令数据集的方法论—主要用在指令微调阶段)的简介、Alpaca/BELLE应用、实战案例代码实现之详细攻略

2023年8月21日—Paper:《Instruction Tuning for Large Language Models: A Survey—大型语言模型的指令调优的综述》翻译与解读

《Instruction Tuning for Large Language Models: A Survey—大型语言模型的指令调优的综述》翻译与解读

| 地址 |

论文地址:https://arxiv.org/abs/2308.10792 文章地址:Instruction Tuning for Large Language Models: A Survey | Papers With Code 文章地址:Instruction Tuning for Large Language Models: A Survey - AMiner |

| 时间 |

2023年8月21日 |

| 作者 |

浙江大学等 Shengyu Zhang, Linfeng Dong, Xiaoya Li, Sen Zhang Xiaofei Sun, Shuhe Wang, Jiwei Li, Runyi Hu Tianwei Zhang▲, Fei Wu and Guoyin Wang |

Abstract摘要

指令微调技术(增强LLM的能力和可控性,有监督微调+增量训练)、指令对

| This paper surveys research works in the quickly advancing field of instruction tuning (IT), a crucial technique to enhance the capabilities and controllability of large language models (LLMs). Instruction tuning refers to the process of further training LLMs on a dataset consisting of (Instruction, Output) pairs in a supervised fashion, which bridges the gap between the next-word prediction objective of LLMs and the users’ objective of having LLMs adhere to human instructions. In this work, we make a systematic review of the literature, including the general methodology of IT, the construction of IT datasets, the training of IT models, and applications to different modalities, domains and application, along with analysis on aspects that influence the outcome of IT (e.g., generation of instruction outputs, size of the instruction dataset, etc). We also review the potential pitfalls of IT along with criticism against it, along with efforts pointing out current deficiencies of existing strategies and suggest some avenues for fruitful research. |

本文调查了指令微调(IT)领域中的研究工作,这是一种关键技术,用于增强大型语言模型(LLM)的能力和可控性。指令微调是指以监督方式进一步训练LLM,使用由(Instruction, Output)对组成的数据集,从而弥合LLM的下一个词预测目标与用户要求LLM遵循人类指令的目标之间的差距。 在本工作中,我们对文献进行了系统回顾,包括IT的一般方法、IT数据集的构建、IT模型的训练,以及应用于不同形式、领域和应用的应用,以及影响IT结果的因素的分析(例如,指令输出的生成、指令数据集的大小等)。我们还回顾了IT的潜在风险,以及对其的批评,同时还指出了现有策略的当前不足之处,并提出了一些有益的研究方向。 |

1 Introduction引言

LLM显著进展(GPT-3→PaLM→LLaMA)、当前痛点(训练目标与用户目标间的不匹配)、

| The field of large language models (LLMs) has witnessed remarkable progress in recent years. LLMs such as GPT-3 (Brown et al., 2020b), PaLM (Chowdhery et al., 2022), and LLaMA (Touvron et al., 2023a) have demonstrated impressive capabilities across a wide range of natural language tasks (Zhao et al., 2021; Wang et al., 2022b, 2023a; Wan et al., 2023; Sun et al., 2023c; Wei et al., 2023; Li et al., 2023a; Gao et al., 2023a; Yao et al., 2023; Yang et al., 2022a; Qian et al., 2022; Lee et al., 2022; Yang et al., 2022b; Gao et al., 2023b; Ning et al., 2023; Liu et al., 2021b; Wiegreffe et al., 2021; Sun et al., 2023b,a;Adlakha et al., 2023; Chen et al., 2023). One of the major issues with LLMs is the mismatch between the training objective and users’ objective: LLMs are typically trained on minimizing the contextual word prediction error on large corpora; while users want the model to "follow their instructions helpfully and safely" (Radford et al., 2019; Brown et al., 2020a; Fedus et al., 2021; Rae et al., 2021; Thoppilan et al., 2022) |

近年来,大型语言模型(LLM)领域取得了显着进展。诸如GPT-3(Brown等,2020b)、PaLM(Chowdhery等,2022)和LLaMA(Touvron等,2023a)等LLM在各种自然语言任务中展示了令人印象深刻的能力。 LLM的一个主要问题是训练目标与用户目标之间的不匹配:LLM通常在最小化大型语料库上的上下文词预测误差的基础上进行训练,而用户希望模型“有助于并安全地遵循他们的指令”(Radford等,2019;Brown等,2020a;Fedus等,2021;Rae等,2021;Thoppilan等,2022)。 |

提出指令微调技术(解决不匹配)、指令微调的3个好处(弥合误差+为人类提供介入模型行为的渠道+性价比高)

| To address this mismatch, instruction tuning (IT) is proposed, serving as an effective technique to enhance the capabilities and controllability of large language models. It involves further training LLMs using (Instruction, Output) pairs, where INSTRUCTION denotes the human instruction for the model, and OUTPUT denotes the desired output that follows the INSTRUCTION. The benefits of IT are threefold: (1) Finetuning an LLM on the instruction dataset bridges the gap between the next-word prediction objective of LLMs and the users’ objective of instruction following; (2) IT allows for a more controllable and predictable model behavior compared to standard LLMs. The instructions serve to constrain the model’s outputs to align with the desired response characteristics or domain knowledge, providing a channel for humans to intervene with the model’s behaviors; and (3) IT is computationally efficient and can help LLMs rapidly adapt to a specific domain without extensive retraining or architectural changes. |

为了解决这种不匹配,提出了指令微调(IT),作为增强大型语言模型能力和可控性的有效技术。它涉及使用(Instruction, Output)对进一步训练LLM,其中指令表示模型的人类指令,输出表示遵循指令的所需输出。 IT的好处有三个: (1)在指令数据集上微调LLM弥合了LLM的下一个词预测目标与用户遵循指令目标之间的差距; (2)与标准LLM相比,IT允许模型行为更可控和可预测。指令用于限制模型的输出,使其与期望的响应特性或领域知识保持一致,为人类提供介入模型行为的渠道; (3)IT在计算上是高效的,并且可以帮助LLM在不需要大量重新训练或架构更改的情况下迅速适应特定领域。 |

指令微调的3大挑战:高质量性、改善严重依赖数据性、可能只学皮毛性

| Despite its effectiveness, IT also poses challenges: (1) Crafting high-quality instructions that properly cover the desired target behaviors is non-trivial: existing instruction datasets are usually limited in quantity, diversity, and creativity; (2) there has been an increasing concern that IT only improves on tasks that are heavily supported in the IT training dataset (Gudibande et al., 2023); and (3) there has been an intense criticism that IT only captures surface-level patterns and styles (e.g., the output format) rather than comprehending and learning the task (Kung and Peng, 2023). Improving instruction adherence and handling unanticipated model responses remain open research problems. These challenges highlight the importance of further investigations, analysis, and summarization in this field, to optimize the fine-tuning process and better understand the behavior of instruction fine-tuned LLMs. |

尽管其有效性,IT也带来了挑战: (1)制定高质量的指令以正确覆盖所需的目标行为并不容易:现有的指令数据集通常在数量、多样性和创意方面受限; (2)越来越多的人担心,IT只会改善那些在IT训练数据集中得到大量支持的任务(Gudibande et al., 2023); (3)有人强烈批评IT只捕获表面模式和样式(例如,输出格式),而不是理解和学习任务(Kung和Peng,2023)。 改进指令遵循和处理意外模型响应仍然是未解决的研究问题。 这些挑战强调了进一步调查、分析和总结在这一领域的重要性,以优化微调过程并更好地理解经过指令微调的LLM的行为。 |

| In the literature, there has been an increasing research interest in analysis and discussions on LLMs, including pre-training methods (Zhao et al., 2023), reasoning abilities (Huang and Chang, 2022), downstream applications (Yang et al., 2023; Sun et al., 2023b), but rarely on the topic of LLM instruction finetuning. This survey attempts to fill this blank, organizing the most up-to-date state of knowledge on this quickly advancing field. Specifically, >>Section 2 presents the general methodology employed in instruction fine-tuning. >>Section 3 outlines the construction process of commonly-used IT representative datasets. >>Section 4 presents representative instruction- finetuned models. >>Section 5 reviews multi-modality techniques and datasets for instruction tuning, including images, speech, and video. >>Section 6 reviews efforts to adapt LLMs to different domains and applications using the IT strategy. >>Section 7 reviews explorations to make instruction fine-tuning more efficient, reducing the computational and time costs associated with adapting large models. >>Section 8 presents the evaluation of IT models, analysis on them, along with criticism against them. |

在文献中,人们越来越关注对LLM进行分析和讨论,包括预训练方法(Zhao等,2023),推理能力(Huang和Chang,2022),下游应用(Yang等,2023;Sun等,2023b),但很少涉及LLM指令微调这个主题。本调查试图填补这一空白,整理关于这一快速发展领域的最新知识状态。具体而言, 第2节介绍了指令微调中采用的一般方法。 第3节概述了常用IT代表性数据集的构建过程。 第4节介绍了代表性的经过指令微调的模型。 第5节回顾了用于指令微调的多模态技术和数据集,包括图像、语音和视频。 第6节回顾了使用IT策略将LLM调整为不同领域和应用的努力。 第7节回顾了使指令微调更高效的探索,减少与调整大型模型相关的计算和时间成本。 第8节介绍了对IT模型的评估、分析以及对它们的批评。 |

2、Methodology方法

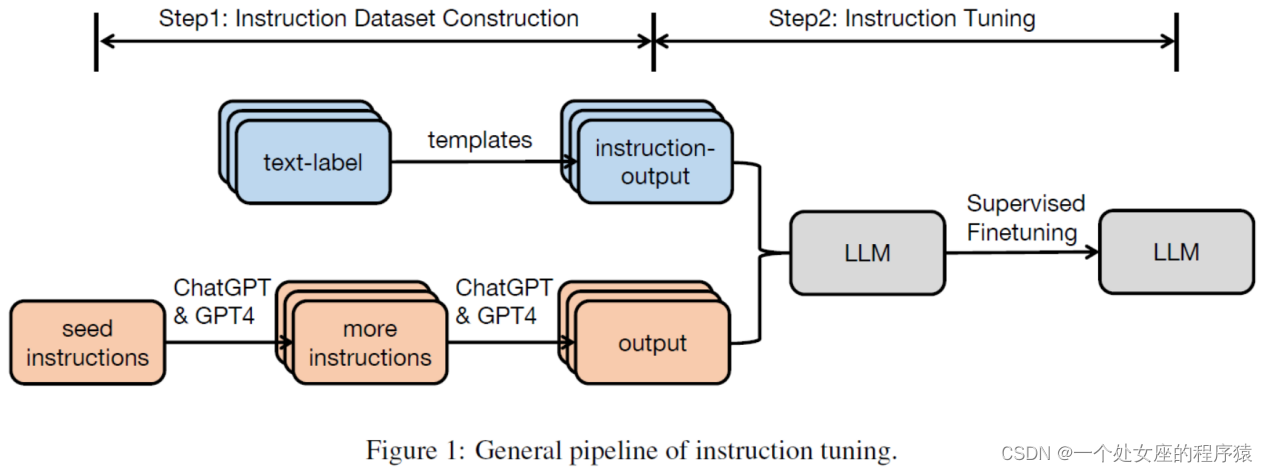

| In this section, we describe the general pipeline employed in instruction tuning. |

在本节中,我们描述了指令微调中采用的一般流程。 |

2.1、Instruction Dataset Construction指令数据集构建:

数据实例三元素:instruction【指定任务】、input【补充上下文】、output【预期输出】

| Each instance in an instruction dataset consists of three elements: an instruction, which is a natural language text sequence to specify the task (e.g., write a thank-you letter to XX for XX, write a blog on the topic of XX, etc); an optional input which provides supplementary information for context; and an anticipated output based on the instruction and the input. |

指令数据集中的每个实例包含三个元素: instruction:一个instruction,是一系列自然语言文本序列,用于指定任务(例如,为XX写一封感谢信,为XX写一篇关于XX主题的博客等); input :可选的input ,为上下文提供补充信息; output:以及基于指令和输入预期的output 。 |

两种方法构建:T1基于现有数据集成策略法(Flan/P3)、T2基于指令收集【手动/自动,如使用LLM的小型手写种子指令进行扩展】采用LLM【如GPT-3.5-Turbo/GPT4】自动生成法(InstructWild/Self-Instruct)

| There are generally two methods for constructing instruction datasets: >>Data integration from annotated natural language datasets. In this approach, (Instruction, Output) pairs are collected from existing annotated natural language datasets by using templates to transform text-label pairs to (Instruction, Output) pairs. Datasets such as Flan (Longpre et al., 2023) and P3 (Sanh et al., 2021) are constructed based on the data integration strategy. >>Generating outputs using LLMs: An alternate way to quickly gather the desired outputs to given instructions is to employ LLMs such as GPT-3.5-Turbo or GPT4 instead of manually collecting the outputs. Instructions can come from two sources: (1) manually collected; or (2) expanded based a small handwritten seed instructions using LLMs. Next, the collected instructions are fed to LLMs to obtain outputs. Datasets such as InstructWild (Xue et al., 2023) and Self-Instruct (Wang et al., 2022c) are geneated following this approach. |

通常有两种方法用于构建指令数据集: >> 基于现有数据集成策略法—从带注释的自然语言数据集中集成数据。在这种方法中,通过使用模板将文本-标签对转换为(Instruction, Output)对,从现有的带注释的自然语言数据集中收集(Instruction, Output)对。Flan(Longpre等,2023)和P3(Sanh等,2021)等数据集是基于数据集集成策略构建的。 >> 采用LLM自动生成法—使用LLM生成输出:一种快速获取给定指令所需输出的替代方法是使用LLM,例如GPT-3.5-Turbo或GPT4,而不是手动收集输出。指令可以来自两个来源:(1)手动收集;或(2)使用LLM扩展基于小型手写种子指令。接下来,收集到的指令被输入LLM以获得输出。InstructWild(Xue等,2023)和Self-Instruct(Wang等,2022c)等数据集是按照这种方法生成的。 |

多轮对话微调数据集:让LLM扮演两个对立角色来生成

| For multi-turn conversational IT datasets, we can have large language models self-play different roles (user and AI assistant) to generate messages in a conversational format (Xu et al., 2023b). |

对于多轮对话型的指令微调数据集,我们可以让大型语言模型扮演不同角色(用户和AI助手),以生成对话格式的消息(Xu等,2023b)。 |

2.2、Instruction Tuning指令微调:有监督的训练

| Based on the collected IT dataset, a pretrained model can be directly fune-tuned in a fully- supervised manner, where given the instruction and the input, the model is trained by predicting each token in the output sequentially. |

基于收集到的指令微调数据集,可以以完全监督的方式直接微调预训练模型,其中在给定指令和输入的情况下,模型通过逐个预测输出中的每个令牌来进行训练。 |

3、Datasets数据集:大多都是英文指令,Natural Instructions/Unnatural Instructions/Super-Natural Instructions、P3/xP3、Flan 2021、Self-Instruct、Evol-Instruct、LIMA、Dolly、OpenAssistant Conversations、Baize

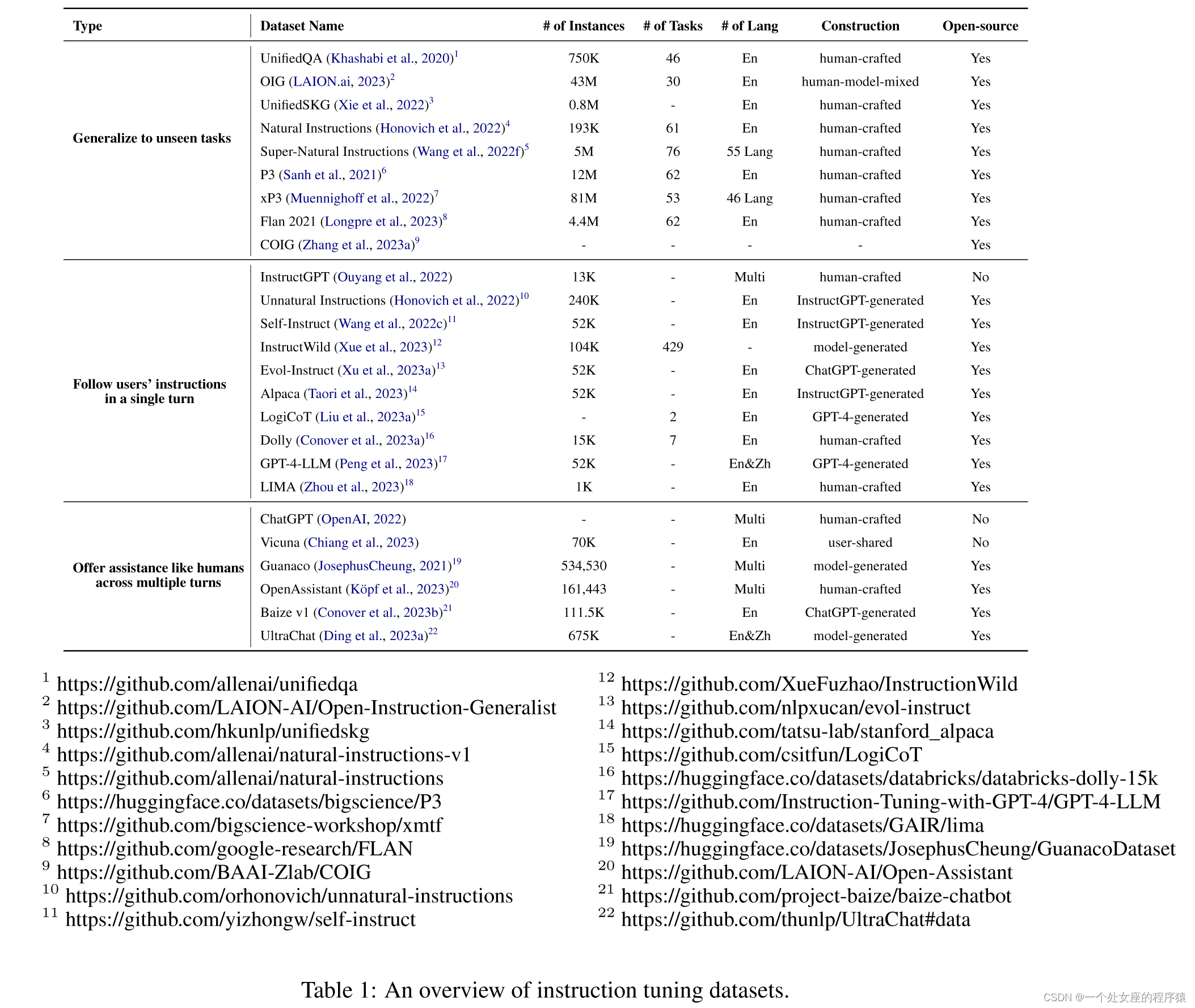

| In this section, we detail widely-used instruction tuning datasets in the community. Table 1 gives an overview of the datasets. |

在本节中,我们详细介绍了社区中广泛使用的指令微调数据集。表格1提供了数据集的概述。 |

3.1、Natural Instructions自然指令:来自193K个实例和61个NLP任务,2元组{输入,输出}

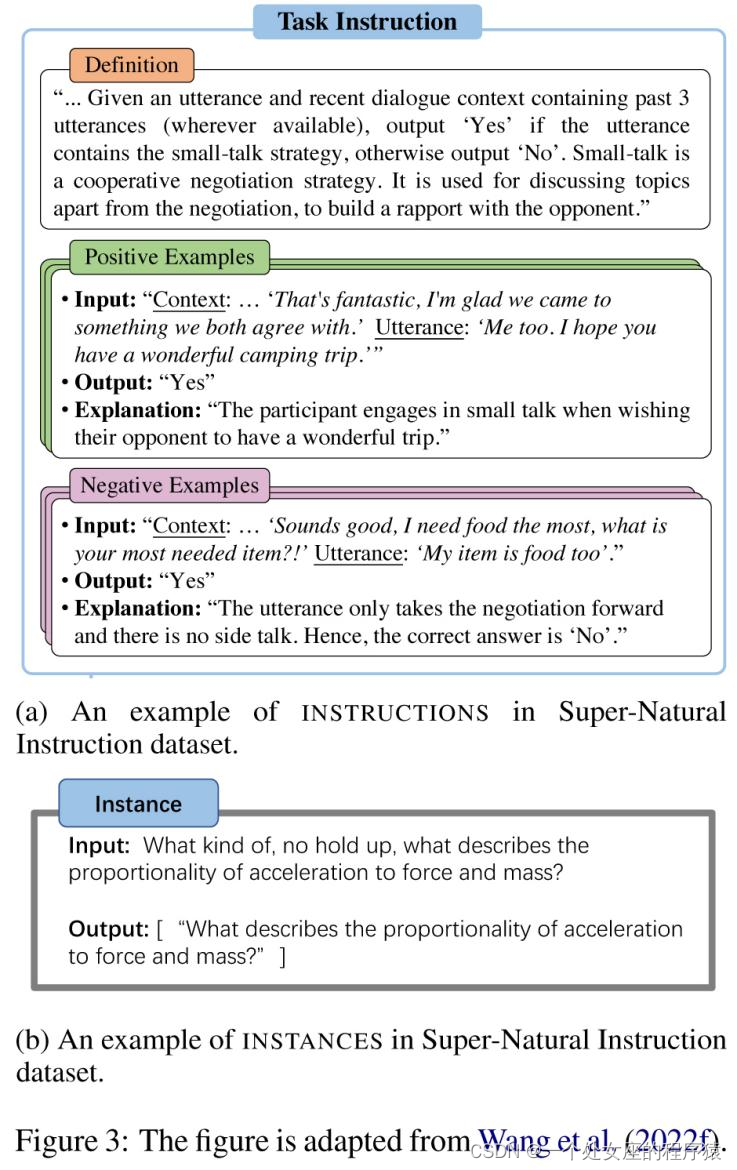

| Natural Instructions (Mishra et al., 2021) is a human-crafted English instruction dataset consisting of 193K instances, coming from 61 distinct NLP tasks. The dataset is comprised of "instructions" and "instances". Each instance in the "instructions" is a task description consisting of 7 components: title, definition, things to avoid emphasis/caution, prompt, positive example, and negative example. Subfigure (a) in Figure 2 gives an example of the "instructions". "Instances" consists of ("input", "output") pairs, which are the input data and textual result that follows the given instruction correctly. Subfigure (b) in Figure 2 gives an example of the instances. The data comes from existing NLP datasets of 61 tasks. The authors collected the "instructions" by referring to the dataset annotating instruction file. Next, the authors constructed the "instances" by unifying data instances across all NLP datasets to ("input", "output") pairs. |

Natural Instructions(Mishra等,2021)是一个人工创建的英语指令数据集,包含了193K个实例,来自61个不同的自然语言处理任务。数据集由“指令”和“实例”组成。 在“指令”中,每个实例是一个任务描述,包括7个组成部分:标题、定义、避免强调/注意事项、提示、正面示例和负面示例。 图2(a)中的子图示例展示了“指令”的一个示例。而“实例”由(“输入”,“输出”)对组成,即输入数据和按照给定指令正确生成的文本结果。图2(b)中的子图示例展示了“实例”的一个示例。 这些数据来自61个任务的现有自然语言处理数据集。作者通过参考数据集的指令注释文件来收集“指令”。接下来,作者通过将所有NLP数据集中的数据实例统一为(“输入”,“输出”)对来构建“实例”。 |

3.2、P3公共提示池:整合170个英语NLP数据集和2052个英语提示,三元组{“输入”【描述任务】+“答案选择”【响应列表】+“目标”【正确响应】}

| P3 (Public Pool of Prompts) (Sanh et al., 2021) is an instruction fine-tuning dataset constructed by integrating 170 English NLP datasets and 2,052 English prompts. Prompts, which are sometimes named task templates, are functions that map a data instance in a conventional NLP task (e.g., question answering, text classification) to a natural language input-output pair. Each instance in P3 has three components: "inputs", "answer_choices", and “targets". "Inputs" is a sequence of text that describes the task in natural language (e.g., "If he like Mary is true, is it also true that he like Mary’s cat?"). "Answer choices" is a list of text string that are applicable responses to the given task (e.g., ["yes", "no", "undetermined"]). "Targets" is a text string that is the correct response to the given "inputs" (e.g., "yes"). The authors built PromptSource, a tool for creating high-quality prompts collaboratively and an archive for open-sourcing high-quality prompts. the P3 dataset was built by randomly sampling a prompt from multiple prompts in the PromptSource and mapping each instance into a ("inputs", "answer choices", "targets") triplet. |

P3(Public Pool of Prompts)(Sanh等,2021)是一个指令微调数据集,通过整合170个英语自然语言处理数据集和2052个英语提示来构建。提示有时被称为任务模板,是一种将传统自然语言处理任务(例如,问题回答、文本分类)的数据实例映射到自然语言输入-输出对的功能。 P3中的每个实例有三个组成部分:“输入”,“答案选择”和“目标”。 “输入”是一系列以自然语言描述任务的文本序列(例如,“如果他喜欢玛丽是真的,那么他是否也喜欢玛丽的猫?”)。 “答案选择”是一个文本字符串列表,是给定任务的适用响应(例如,“是”,“否”,“不确定”)。 “目标”是文本字符串,是给定“输入”的正确响应(例如,“是”)。 作者构建了PromptSource,这是一个协作创建高质量提示的工具,也是一个开源高质量提示的存档。P3数据集是通过从PromptSource中随机抽样选择一个提示,将每个实例映射为一个(“输入”,“答案选择”,“目标”)三元组而构建的。 |

3.3、xP3跨语言公共提示池:46种语言中16类NLP任务,2元组{输入和目标}

| xP3 (Crosslingual Public Pool of Prompts) (Muennighoff et al., 2022) is a multilingual instruction dataset consisting of 16 diverse natural language tasks in 46 languages. Each instance in the dataset has two components: "inputs" and "targets". "Inputs" is a task description in natural language. "Targets" is the textual result that follows the "inputs" instruction correctly. The original data in xP3 comes from three sources: the English instruction dataset P3, 4 English unseen tasks in P3 (e.g., translation, program synthesis), and 30 multilingual NLP datasets. The authors built the xP3 dataset by sampling human-written task templates from PromptSource and then filling templates to transform diverse NLP tasks into a unified formalization. For example, a task template for the natural language inference task is as follows: “If Premise is true, is it also true that Hypothesis?”; "yes", "maybe", no" with respect to the original task labels "entailment (0)", "neutral (1)" and "contradiction (2)". |

xP3(Crosslingual Public Pool of Prompts)(Muennighoff等,2022)是一个多语言指令数据集,包含46种语言中16个不同的自然语言处理任务。 数据集中的每个实例有两个组成部分:“输入”和“目标”。 “输入”是自然语言中的任务描述。 “目标”是按照“输入”指令正确生成的文本结果。 xP3中的原始数据来自三个来源:英语指令数据集P3,P3中的4个英语未见过的任务(例如,翻译、程序合成)以及30个多语言自然语言处理数据集。作者通过从PromptSource中随机抽样选择人工编写的任务模板,然后填充模板,将不同的自然语言处理任务转换为统一的形式,从而构建了xP3数据集。 |

3.4、Flan 2021:将63个NLP基准转换为输入-输出对进而构建,2元组{输入+目标}

| Flan 2021 (Longpre et al., 2023) is an English instruction dataset constructed by transforming 62 widely-used NLP benchmarks (e.g., SST-2, SNLI, AG News, MultiRC) into language input- output pairs. Each instance in the Flan 2021 has "input" and "target" components. "Input" is a sequence of text that describes a task via a natural language instruction (e.g., "determine the sentiment of the sentence ’He likes the cat.’ is positive or negative?"). "Target" is a textual result that executes the "input" instruction correctly (e.g., "positive"). The authors transformed conventional NLP datasets into input-target pairs by: Step 1: manually composing instruction and target templates; Step 2: filling templates with data instances from the dataset. |

Flan 2021(Longpre等,2023)是一个英语指令数据集,通过将62个广泛使用的自然语言处理基准(例如,SST-2、SNLI、AG News、MultiRC)转换为语言输入-输出对来构建。Flan 2021中的每个实例包含“输入”和“目标”两个组成部分。“输入”是描述任务的自然语言指令序列(例如,“确定句子'他喜欢猫。'的情感是积极还是消极?”)。 “目标”是正确执行“输入”指令的文本结果(例如,“积极”)。作者通过以下步骤将传统的自然语言处理数据集转换为输入-目标对: 步骤1:手动组合指令和目标模板; 步骤2:使用数据集中的数据实例填充模板。 |

3.5、Unnatural Instructions非自然指令:基于InstructGPT构建的24万个实例,4元组{指令+输入+约束+输出}

| Unnatural Instructions (Honovich et al., 2022) is an instruction dataset with approximately 240,000 instances, constructed using InstructGPT (text- davinci-002) (Ouyang et al., 2022). Each instance in the dataset has four components: INSTRUCTION, INPUT, CONSTRAINTS, and OUTPUT. Instruction" is a description of the instructing task in natural language. "Input" is an argument in natural language that instantiates the instruction task. |

非自然指令(Honovich等,2022)是一个包含约24万个实例的指令数据集,使用InstructGPT(text-davinci-002)(Ouyang等,2022)构建而成。数据集中的每个实例有四个组成部分:指令、输入、约束和输出。 “指令”是自然语言中的指令任务描述。 “输入”是实例化指令任务的自然语言参数。 |

3.6、Self-Instruct

LLMs之Data:指令微调的简介、Self Instruction思想(一种生成指令数据集的方法论—主要用在指令微调阶段)的简介、Alpaca/BELLE应用、实战案例代码实现之详细攻略

包含基于InstructGPT的52K个训练指令和252个评估指令,3元组{ “指令”【定义任务】+“输入”【指令的内容补充】+“输出”【正确结果】}

| Self-Instruct (Wang et al., 2022c) is an English instruction dataset with 52K training instructions and 252 evaluation instructions, constructed using InstructGPT (Ouyang et al., 2022). Each data instance consists of "instruction", "input" and "output". "Instruction" is a task definition in natural language (e.g., "Please answer the following question."). "Input" is optional and is used as supplementary content for the instruction (e.g., "Which country’s capital is Beijing?"), and "output" is the textual result that follows the instruction correctly (e.g., "Beijing"). |

自我指导(Self-Instruct)(Wang等,2022c)是一个英语指令数据集,包含52K个训练指令和252个评估指令,使用InstructGPT(Ouyang等,2022)构建而成。每个数据实例包括“指令”、“输入”和“输出”三个部分。 “指令”是自然语言中的任务定义(例如,“请回答以下问题。”)。 “输入”是可选的,用作指令的补充内容(例如,“哪个国家的首都是北京?”),而“输出”是正确遵循指令生成的文本结果(例如,“北京”)。 |

生成四步骤:构建示例(175个种子任务来抽样8个自然语言指令)来提示InstructGPT生成更多指令→判断是否分类任务+基于给定的“指令”提示InstructGPT生成“输入”再结合生成“输出”→为相应的指令任务生成“输入”和“输出”→后处理(过滤和删除重复)→最终得到52K个英语指令

| The full dataset is generated based on the following steps: Step 1. The authors randomly sampled 8 natural language instructions from the 175 seed tasks as examples and prompted InstructGPT to generate more task instructions. Step 2. The authors determined whether the instructions generated in Step 1 is a classification task. If yes, they asked InstructGPT to generate all possible options for the output based on the given instruction and randomly selected a particular output category to prompt InstructGPT to generate the corresponding "input" content. For Instructions that do not belong to a classification task, there should be countless "output" options. The authors proposed to use the Input-first strategy, where InstructGPT was prompted to generate the "input" based on the given "instruction" first and then generate the "output" according to the "instruction" and the generated "input". Step 3. Based on results of step-2, the authors used InstructGPT to generate the "input" and "output" for corresponding instruction tasks using the output-first or input-first strategy. Step 4. The authors post-processed (e.g., filtering out similar instructions and removing duplicate data for input and output) the generated instruction tasks and got a final number of 52K English instructions. |

整个数据集是通过以下步骤生成的: 步骤1:作者随机从175个种子任务中抽样8个自然语言指令作为示例,并提示InstructGPT生成更多的任务指令。 步骤2:作者确定步骤1中生成的指令是否是分类任务。如果是,他们要求InstructGPT基于给定的指令生成所有可能的输出选项,并随机选择一个特定的输出类别,以促使InstructGPT生成相应的“输入”内容。对于不属于分类任务的指令,应该有无数个“输出”选项。作者提出了首先生成“输入”的策略,即首先基于给定的“指令”提示InstructGPT生成“输入”,然后根据“指令”和生成的“输入”生成“输出”。 步骤3:根据步骤2的结果,作者使用InstructGPT基于输出优先或输入优先策略为相应的指令任务生成“输入”和“输出”。 步骤4:作者对生成的指令任务进行后处理(例如,过滤相似指令,删除输入和输出的重复数据),得到最终的52K个英语指令。 |

3.7、Evol-Instruct:包含基于ChatGPT采用进化策略(添加约束、增加推理步骤、复杂化输入等)构建的52K个训练指令和218个评估指令,二元组{ instruction, response}

形成过程:基于52K的初始集→随机选择1个进化策略让ChatGPT重写指令→过滤未进化的指令对(利用ChatGPT和规则)→利用新生成进化指令对更新数据集→重复上述四次→收集了25万个指令对

| Evol-Instruct (Xu et al., 2023a) is an English instruction dataset consisting of a training set with 52K instructions and an evaluation set with 218 instructions. The authors prompted ChatGPT (OpenAI, 2022) to rewrite instructions using the in-depth and in-breath evolving strategies. The in-depth evolving strategy contains five types of operations, e.g., adding constraints, increasing reasoning steps, complicating input and etc. The in-breath evolving strategy upgrades the simple instruction to a more complex one or directly generates a new instruction to increase diversity. The authors first used 52K (instruction, response) pairs as the initial set. Then they randomly sampled an evolving strategy and asked ChatGPT to rewrite the initial instruction based on the chosen evolved strategy. The author employed ChatGPT and rules to filter out no-evolved instruction pairs and updated the dataset with newly generated evolved instruction pairs. After repeating the above process 4 times, the authors collected 250K instruction pairs. Besides the train set, the authors collected 218 human-generated instructions from real scenarios (e.g., open-source projects, platforms, and forums), called the Evol- Instruct test set. |

Evol-Instruct(Xu等,2023a)是一个英语指令数据集,包含一个包含52K个训练指令和218个评估指令的训练集。作者使用ChatGPT(OpenAI,2022)以深入和全面的进化策略重写指令来构建这个数据集。深入进化策略包含五种类型的操作,例如添加约束、增加推理步骤、复杂化输入等。全面进化策略将简单指令升级为更复杂的指令,或直接生成新的指令以增加多样性。 作者首先使用52K个 (instruction, response)对作为初始集。然后随机选择一个进化策略,要求ChatGPT根据选择的进化策略重写初始指令。作者使用ChatGPT和规则来过滤掉未进化的指令对,并使用新生成的进化指令对更新数据集。在重复上述过程4次之后,作者收集了25万个指令对。除了训练集之外,作者还从真实场景(例如,开源项目、平台和论坛)中收集了218个人工生成的指令,称为Evol-Instruct测试集。 |

3.8、LIMA:包含1K数据实例的训练集(75%源自3个社区问答网站)和300个实例的测试集,二元组{instruction, response}

| LIMA (Zhou et al., 2023) is an English instruction dataset consisting of a train set with 1K data instances and a test set with 300 instances. The train set contains 1K ("instruction", "response") pairs. For the training data, 75% are sampled from three community question & answers websites (i.e., Stack Exchange, wikiHow, and the Pushshift Reddit Dataset (Baumgartner et al., 2020)); 20% are manually written by a set of the authors (referred Group A) inspired by their interests; 5% are sampled from the Super-Natural Instructions dataset (Wang et al., 2022d). As for the valid set, the authors sampled 50 instances from the Group A author-written set. The test set contains 300 examples, with 76.7% written by another group (Group B) of authors and 23.3% sampled from the Pushshift Reddit Dataset (Baumgartner et al., 2020), which is a collection of questions & answers within the Reddit community. |

LIMA(Zhou等,2023)是一个英语指令数据集,包含一个包含1K个数据实例的训练集和一个包含300个实例的测试集。训练集包含1K个(instruction, response)对。对于训练数据,其中75%来自三个社区问答网站(即Stack Exchange、wikiHow和Pushshift Reddit数据集(Baumgartner等,2020));20%由一组作者(Group A)手动编写,受到他们兴趣的启发;5%来自Super-Natural Instructions数据集(Wang等,2022d)。至于验证集,作者从Group A作者编写的集合中抽样了50个实例。测试集包含300个示例,其中76.7%由另一组作者(Group B)编写,23.3%来自Pushshift Reddit数据集(Baumgartner等,2020),这是Reddit社区中的问题和回答的集合。 |

3.9、Super-Natural Instructions超级自然指令:包含1616个NLP任务和500万个任务实例+涵盖76种任务类型和55种语言,二元组(“指令”和“任务实例”)

| Super Natural Instructions (Wang et al., 2022f) is a multilingual instruction collection composed of 1,616 NLP tasks and 5M task instances, covering 76 distinct task types (e.g., text classification, information extraction, text rewriting, text composition and etc.) and 55 languages. Each task in the dataset consists of an "instruction" and "task instances". Specifically, "instruction" has three components: a "definition" that describes the task in natural language; "positive examples" that are samples of inputs and correct outputs, along with a short explanation for each; and "negative examples" that are samples of inputs and undesired outputs, along with a short explanation for each, as shown in Figure 2 (a). "Task instances" are data instances comprised of textual input and a list of acceptable textual outputs, as shown in Figure 2 (b). The original data in Super Natural Instructions comes from three sources: (1) existing public NLP datasets (e.g., CommonsenseQA); (2) applicable intermediate annotations that are generated through a crowdsourcing process (e.g., paraphrasing results to a given question during a crowdsourcing QA dataset); (3) synthetic tasks that are transformed from symbolic tasks and rephrased in a few sentences (e.g., algebraic operations like number comparison). |

超级自然指令(Super Natural Instructions)(Wang等,2022f)是一个多语言指令收集,包含1616个自然语言处理任务和500万个任务实例,涵盖76种不同的任务类型(例如,文本分类、信息提取、文本改写、文本组成等)和55种语言。数据集中的每个任务包括“指令”和“任务实例”两个部分。 具体来说,“指令”有三个组成部分:以自然语言描述任务的“定义”;“正面示例”,它是输入和正确输出的示例,每个示例都附有简短的解释;“负面示例”,它是输入和不希望的输出的示例,每个示例都附有简短的解释,如图2(a)所示。 “任务实例”是由文本输入和可接受的文本输出列表组成的数据实例,如图2(b)所示。 超级自然指令中的原始数据来自三个来源:(1)现有的公共自然语言处理数据集(例如,CommonsenseQA);(2)通过众包过程生成的适用中间注释(例如,在众包问答数据集中对给定问题进行释义);(3)从符号任务转换而来且经过重新表述的合成任务,这些任务在几句话中重新表述(例如,代数运算,如数字比较)。 |

3.10、Dolly:包含15000个人工生成英语指令+7种特定类型

| Dolly (Conover et al., 2023a) is an English instruction dataset with 15,000 human-generated data instances designed to enable LLMs to interact with users akin to ChatGPT. The dataset is designed for simulating a wide range of human behaviors, covering 7 specific types: open Q&A, closed Q&A, extracting information from Wikipedia, summarizing information from Wikipedia, brainstorming, classification, and creative writing. Examples of each task type in the dataset are shown in Table 2. |

Dolly(Conover等,2023a)是一个包含15000个人工生成的数据实例的英语指令数据集,旨在使大型语言模型能够与用户进行类似于ChatGPT的互动。该数据集旨在模拟各种人类行为,涵盖7种特定类型:开放式问答、封闭式问答、从维基百科中提取信息、从维基百科中总结信息、头脑风暴、分类和创意写作。数据集中每种任务类型的示例如表2所示。 |

3.11、OpenAssistant Conversations

包含158K条消息(90K个用户提示+68K个助手回复),35种语言中65K个对话树+450K个人工注释的质量评分,对话树(节点,路径/线程)

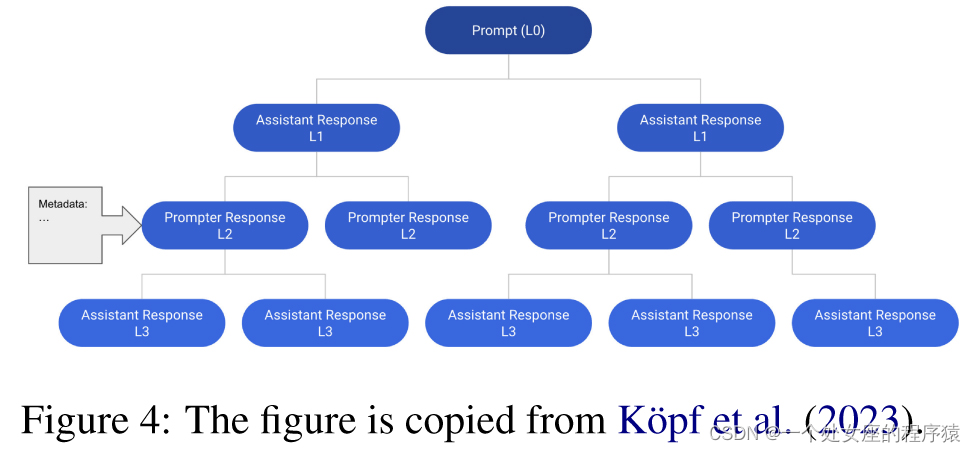

| OpenAssistant Conversations (Köpf et al., 2023) is a human-crafted multilingual assistant-style conversation corpus consisting of 161,443 messages (i.e., 91,829 user prompts, 69,614 assistant replies) from 66,497 conversation trees in 35 languages, along with 461,292 human-annotated quality ratings. Each instance in the dataset is a conversation tree (CT). Specifically, each node in a conversation tree denotes a message generated by roles (i.e., prompter, assistant) in the conversation. A CT’s root node represents an initial prompt from the prompter, while other nodes denote replies from a prompter or an assistant. A path from the root to any node in a CT represents a valid conversation between the prompter and assistant in turns and is referred to as a thread. Figure 4 shows an example of a conversation tree consisting of 12 messages in 6 threads. |

OpenAssistant Conversations(Köpf等,2023)是一个人工创建的多语言助手风格对话语料库,包含161443条消息(即91829个用户提示,69614个助手回复),来自35种语言中66497个对话树,同时还包含461292个人工注释的质量评分。 数据集中的每个实例是一个对话树(CT)。具体来说,对话树中的每个节点表示会话中角色(即提示者、助手)生成的消息。CT的根节点表示提示者的初始提示,而其他节点表示提示者或助手的回复。从根节点到CT中任何节点的路径表示提示者和助手之间的有效会话,称为线程。图4显示了一个由12条消息组成的对话树的示例,其中包含6个线程。 |

五步流程收集对话树:提示者→标记提示→扩展树节点→标记回复→排名

| The authors first collected conversation trees based on the five-step pipeline: Step 1. prompting: contributors performed as the prompter and crafted initial prompts; Step 2. labeling prompts: contributors rated scores to initial prompts from step 1, and the authors chose high-quality prompts as root nodes with a balanced sampling strategy; Step 3. expanding tree nodes: contributors added reply messages as prompter or assistant; Step 4. labeling replies: contributors assigned scores to existing node replies; Step 5. ranking: contributors ranked assistant replies referring to the contributor guidelines. The tree state machine managed and tracked the state (e.g., initial state, growing state, end state) throughout the conversation crafting process. Subsequently, the OpenAssistant Conversations dataset was built by filtering out offensive and inappropriate conversation trees. |

作者首先根据以下五步流程收集了对话树: 步骤1:提示者:贡献者扮演提示者的角色,创建初始提示; 步骤2:标记提示:贡献者对步骤1中的初始提示进行评分,作者使用平衡的抽样策略选择高质量的提示作为根节点; 步骤3:扩展树节点:贡献者添加提示者或助手的回复消息; 步骤4:标记回复:贡献者对现有节点的回复分配分数; 步骤5:排名:贡献者根据贡献者指南对助手的回复进行排名。 树状态机在整个对话创作过程中管理和跟踪状态(例如,初始状态、增长状态、结束状态)。随后,通过过滤掉冒犯性和不适当的对话树,构建了OpenAssistant Conversations数据集。 |

3.12、Baize:基于ChatGPT(self-chat思想)构建的111.5K个实例多轮(3.4轮)聊天语料库,二元组{ prompt,response}

| Baize (Conover et al., 2023b) is an English multi- turn chat corpus with 111.5K instances constructed using ChatGPT. And each turn consists of a user’s prompt and a response from the assistant. Each instance in Baize v1 contains 3.4 turns of conversations. To create the Baize dataset, the authors proposed self-chat, where ChatGPT plays roles of the user and the AI assistant in turns and generates messages in a conversational format. Specifically, the authors first crafted a task template that defines the roles and tasks for ChatGPT (as shown in Table 3). Next, they sampled questions (e.g., "How do you fix a Google Play Store account that isn’t working?") from Quora and Stack Overflow datasets as conversation seeds (e.g., topics). Subsequently, they prompted ChatGPT with the template and the sampled seed. ChatGPT continuously generates messages for both sides until a natural stopping point is reached. |

Baize(Conover等,2023b)是一个包含111.5K个实例的英语多轮聊天语料库,使用ChatGPT构建。每个轮次包括用户的提示和助手的回复。Baize v1中的每个实例包含3.4轮的对话。 为了创建Baize数据集,作者提出了自我对话的概念,其中ChatGPT在轮流扮演用户和AI助手的角色,以会话格式生成消息。具体来说,作者首先创建了一个任务模板,定义了ChatGPT的角色和任务(如表3所示)。接下来,他们从Quora和Stack Overflow数据集中抽样问题(例如,“如何修复不工作的Google Play Store账户?”)作为会话种子(例如,话题)。随后,他们使用模板和抽样的种子提示ChatGPT。ChatGPT持续地为双方生成消息,直到达到自然停止点为止。 |

4、Instruction Fine-tuned LLMs指导微调的LLM模型

| In this section, we detail widely-used LLM models in the community that are trained through instruction fine-tuning. |

在本节中,我们详细介绍社区中广泛使用的通过指导微调训练的LLM模型。 |

4.1、InstructGPT:基于GPT-3模型+人类指导微调

LLMs之InstructGPT:《Training language models to follow instructions with human feedback》翻译与解读

微调三步骤(基于人类筛选指令进行SFT→基于一个instruction多个降序的responses来训练RM模型→利用RL的PPO策略优化RM模型)

| InstructGPT (176B) (Ouyang et al., 2022) is initialized with GPT-3 (176B) (Brown et al., 2020b) and then fine-tuned on human instructions. The fine-tuning procedure is composed of the following three steps: (1) supervised fine-tuning (SFT) on the human-filtered instruction dataset, which is collected from Playground API history records; (2) training a reward model to predict human preferences based on an annotated dataset, which is constructed though human labors by sampling multiple responses for one instruction and rank them from the best to the worst; (3) further optimizing the model from Step 1 with new instructions and the trained reward model in step (2). Parameters are updated using the proximal policy optimization (PPO) (Schulman et al., 2017) method, a policy gradient reinforcement learning method. Steps (2) and (3) are alternated multiple times until the model performance does not significantly improve. |

InstructGPT(176B)(Ouyang等,2022)以GPT-3(176B)(Brown等,2020b)为初始模型,然后在人类指导下进行微调。 微调过程包括以下三个步骤: (1)在人类筛选的指令数据集上进行监督微调(SFT),该数据集从Playground API历史记录中收集; (2)训练奖励模型以预测人类偏好,基于通过人工劳动采样的带注释数据集,该数据集为一个指令采样多个响应,并将其从最佳到最差进行排序; (3)使用步骤(2)中训练的奖励模型从步骤1中的模型和新指令进一步优化。参数使用近端策略优化(PPO)(Schulman等,2017)方法进行更新,这是一种策略梯度强化学习方法。步骤(2)和(3)多次交替进行,直到模型性能不再显著提高为止。 |

InstructGPT的真实性、毒性、模型性能等表现非常出色

| Overall, InstructGPT outperforms GPT-3. For automatic evaluations, InstructGPT outperforms GPT-3 by 10% on the TruthfulQA (Lin et al., 2021) dataset in terms of truthfulness and by 7% on the RealToxicityPrompts (Gehman et al., 2020) in terms of toxicity. On NLP datasets (i.e., WSC), InstructGPT achieves comparable performance to GPT-3. For human evaluations, regarding four different aspects, including following correct instructions, following explicit constraints, fewer hallucinations, and generating appropriate responses, InstructGPT outperforms GPT-3 +10%, +20%, -20%, and +10%, respectively. |

总体而言,InstructGPT在真实性QA数据集(Lin等,2021)方面比GPT-3表现出色,真实性方面提高了10%,在RealToxicityPrompts数据集—即评估生成文本模型的毒性(Gehman等,2020)方面提高了7%。在自然语言处理数据集(例如WSC)上,InstructGPT的性能与GPT-3相当。在人类评估方面,涉及遵循正确指令、遵循明确约束、幻觉较少以及生成适当响应等四个不同方面,InstructGPT分别优于GPT-3 +10%、+20%、-20%和+10%。 |

4.2、BLOOMZ:基于BLOOM模型+指令数据集xP3,多种任务及其数据集上表现均超于BLOOM

LLMs:《BLOOM: A 176B-Parameter Open-Access Multilingual Language Model》翻译与解读

LLMs:《BLOOM: A 176B-Parameter Open-Access Multilingual Language Model》翻译与解读_一个处女座的程序猿的博客-程序员宅基地

| BLOOMZ (176B) (Muennighoff et al., 2022) is initialized with BLOOM (176B) (Scao et al., 2022), and then fine-tuned on the instruction dataset xP3 (Muennighoff et al., 2022), a collection of human-instruction datasets in 46 languages, coming from two sources: (1) P3, which is a collection of (English instruction, English response) pairs; and (2) an (English instruction, Multilingual response) set which is transformed from multilingual NLP datasets (e.g., Chinese benchmarks) by filling task templates with pre- defined English instructions. For automatic evaluation, BLOOMZ performs better than BLOOM in the zero-shot setting by +10.4%, 20.5%, and 9.8% on coreference resolution, sentence completion and natural language inference datasets, respectively. For the HumanEval benchmark (Chen et al., 2021), BLOOMZ outperforms BLOOM by 10% in terms of the Pass@100 metric. For generative tasks, BLOOMZ receives +9% BLEU improvement compared to BLOOM on the lm-evaluation-harness benchmark. |

BLOOMZ(176B)(Muennighoff等,2022)以BLOOM(176B)(Scao等,2022)为初始模型,然后在指令数据集xP3(Muennighoff等,2022)上进行微调。xP3是一个包含46种语言的人类指令数据集的集合,来自两个来源: (1)P3,其中包含(英文指令,英文响应)对; (2)一个(英文指令,多语言响应)集,通过在多语言自然语言处理数据集(例如中文基准)中使用预定义的英文指令填充任务模板而转化而来。 对于自动评估,BLOOMZ在zero-shot设置下在共指消解、句子补全和自然语言推理数据集上分别比BLOOM提高了10.4%、20.5%和9.8%。对于HumanEval基准(Chen等,2021),BLOOMZ在Pass@100度量上优于BLOOM 10%。对于生成任务,BLOOMZ在lm-evaluation-harness基准上比BLOOM的BLEU分数提高了9%。 |

"Pass@100" 是一种评估指标,用于衡量生成式模型在生成任务中的性能。通常,生成式模型会根据输入生成相应的文本输出。

T1、BLEU指标:在文本生成任务中,一种评估方式是将生成的文本与人工提供的参考文本进行比较,以测量生成文本的质量。"BLEU"(Bilingual Evaluation Understudy,双语评估候补)是一种常用的自动评估指标,用于衡量生成文本与参考文本之间的相似性。

T2、Pass@K指标:而在生成式任务中,尤其是类似问答任务中,还有一些其他的评估指标,如"Pass@K",其中 K 代表一个特定的数值,表示模型生成的回答是否在前 K 个候选中。例如,"Pass@100" 意味着模型生成的回答是否在前100个候选中。

4.3、Flan-T5:基于T5模型+FLAN数据集微调,基于JAX的T5X框架+128*TPU v4=37小时

| Flan-T5 (11B) is is a large language model initialized with T5 (11B) (Raffel et al., 2019), and then fine-tuned on the FLAN dataset (Longpre et al., 2023). The FLAN dataset is a collection of (instruction, pairs) pairs, constructed from 62 datasets of 12 NLP tasks (e.g., natural language inference, commonsense reasoning, paraphrase generation) by filling templates with various instructions under a unified task formalization. During fine-tuning, FLAN-T5 adapts the JAX- based T5X framework and selects the best model evaluated on the held-out tasks every 2k step. Compared with T5’s pre-training stage, fine-tuning costs 0.2% computational resources (approximately 128 TPU v4 chips for 37 hours). For evaluation, FLAN-T5 (11B) outperforms T5 (11B), and achieves comparable results to larger models, including PaLM (60B) (Chowdhery et al., 2022) in the few-shot setting. FLAN- T5 outperforms T5 by +18.9%, +12.3%, +4.1%, +5.8%, +2.1%, and +8% on MMLU (Hendrycks et al., 2020), BBH (Suzgun et al., 2022), TyDiQA (Clark et al., 2020), MGSM (Shi et al., 2022), open-ended generation, and RealToxicityPrompts (Gehman et al., 2020), respectively. In few-shot settings, FLAN-T5 outperforms PaLM +1.4% and +1.2% on the BBH and TyDiQA datasets. |

Flan-T5(11B)是一种大型语言模型,其初始化采用T5(11B)(Raffel等,2019)并在FLAN数据集(Longpre等,2023)上进行微调。FLAN数据集是一个包含(instruction, pairs)对的集合,通过在统一任务规范下使用各种指令填充模板,从12个自然语言处理任务的62个数据集构建而成(例如,自然语言推理、常识推理、释义生成)。 在微调过程中,FLAN-T5采用基于JAX的T5X框架,并在每2k步时选择在预留任务上评估的最佳模型。与T5的预训练阶段相比,微调过程消耗0.2%的计算资源(大约128个TPU v4芯片,耗时37小时)。 对于评估,FLAN-T5(11B)优于T5(11B),在少样本设置中实现了与更大模型(如PaLM(60B)(Chowdhery等,2022))相当的结果。FLAN-T5在MMLU(Hendrycks等,2020)、BBH(Suzgun等,2022)、TyDiQA(Clark等,2020)、MGSM(Shi等,2022)、开放式生成以及RealToxicityPrompts(Gehman等,2020)方面分别优于T5 +18.9%、+12.3%、+4.1%、+5.8%、+2.1%和+8%。在少样本设置中,FLAN-T5在BBH和TyDiQA数据集上分别优于PaLM +1.4%和+1.2%。 |

4.4、Alpaca:基于LLaMA模型+利用InstructGPT生成指令数据集进行微调,8*A100-80G设备+混合精度AMP+DP=3小时

LLMs之Alpaca:《Alpaca: A Strong, Replicable Instruction-Following Model》翻译与解读

LLMs之Alpaca:《Alpaca: A Strong, Replicable Instruction-Following Model》翻译与解读_一个处女座的程序猿的博客-程序员宅基地

| Alpaca (7B) (Taori et al., 2023) is a language model trained by fine-tuning LLaMA (7B) (Touvron et al., 2023a) on the constructed instruction dataset generated by InstructGPT (175B, text-davinci-003) (Ouyang et al., 2022). The fine-tuning process takes around 3 hours on an 8-card 80GB A100 device with mixed precision training and fully shared data parallelism. Alpaca (7B) achieves comparable performances to InstructGPT (175B,text-davinci-003) in terms of human evaluation. Specifically, Alpaca outperforms InstructGPT on the self-instruct dataset, garnering 90 instances of victories compared to 89 instances. |

Alpaca(7B)(Taori等,2023)是一种语言模型,通过对由InstructGPT(175B,text-davinci-003)(Ouyang等,2022)生成的构建指令数据集进行微调,使用LLaMA(7B)(Touvron等,2023a)完成微调。微调过程在8卡80GB A100设备上进行,使用混合精度训练和完全共享的数据并行技术,大约耗时3小时。 Alpaca(7B)在人类评估方面表现与InstructGPT(175B,text-davinci-003)相当。具体来说,Alpaca在自我指导数据集上优于InstructGPT,获得了90次胜利,而InstructGPT获得了89次。 |

4.5、Vicuna:基于LLaMA模型+利用ShareGPT的ChatGPT生成对话数据集(过滤低质得70K)进行微调,上下文扩到2K+GradientCheckpointing和FlashAttention(降低GPU成本)+8*A100-80G=24小时

LLMs之Vicuna:《Vicuna: An Open-Source Chatbot Impressing GPT-4 with 90%* ChatGPT Quality》翻译与解读

| Vicuna (13B) (Chiang et al., 2023) is a language model trained by fine-tuning LLaMA (13B) (Touvron et al., 2023a) on the conversational dataset generated by ChatGPT. The authors gathered user-shared ChatGPT conversations from ShareGPT.com, and got 70K conversation records after filtering out low-quality samples. LLaMA (13B) was fine-tuned on the constructed conversation dataset using a modified loss function tailored to multi-turn conversations. To better understand long context across multiple- turn dialog, the authors expanded the max context length from 512 to 2048. For training, the authors adopted the gradient checkpointing and flash attention (Dao et al., 2022) techniques to reduce the GPU memory cost in the fine-tuning process. The fine-tuning process takes 24 hours on an 8 × 80GB A100 device with fully shared data parallelism. The authors built a test set used exclusively to measure chatbots’ performances. They collected a test set composed by 8 question categories, such as Fermi problems, role play scenarios, coding/math tasks, etc, and then asked GPT-4 (OpenAI, 2023) to rate models’ responses considering helpfulness, relevance, accuracy, and detail. On the constructed test set, Vicuna (13B)outperforms Alpaca (13B) (Taori et al., 2023) and et al., 2022), open-ended generation, and LLaMA (13B) in 90% of the test questions, and generates equal or better rating responses compared to ChatGPT in 45% of the questions. |

Vicuna(13B)(Chiang等,2023)是一种语言模型,通过对由ChatGPT生成的对话数据集进行微调,使用LLaMA(13B)(Touvron等,2023a)完成微调。 作者从ShareGPT.com收集了用户分享的ChatGPT对话,并在滤除低质量样本后获得了70K个对话记录。使用经过修改的适用于多轮对话的损失函数对LLaMA(13B)进行了微调。 为了更好地理解多轮对话中的长上下文,作者将最大上下文长度从512扩展到2048。在训练过程中,作者采用了GradientCheckpointing和FlashAttention(Dao等,2022)技术,以减少微调过程中的GPU内存成本。微调过程在8个80GB A100设备上进行,使用完全共享的数据并行技术,耗时24小时。 作者构建了一个专门用于衡量聊天机器人表现的测试集。他们收集了一个由8个问题类别组成的测试集,例如费米问题、角色扮演情景、编码/数学任务等,然后要求GPT-4(OpenAI,2023)根据有用性、相关性、准确性和细节对模型的响应进行评分。在构建的测试集上,Vicuna(13B)在90%的测试问题中优于Alpaca(13B)、开放式生成以及LLaMA(13B),并在45%的问题中生成与ChatGPT相等或更好的评分响应。 |

4.6、GPT-4-LLM:基于LLaMA模型+利用Alpaca的指令和GPT-4生成指令数据集进行有监督微调→基于构建比较数据集(收集GPT-4、InstructGPT 等多个大模型的指令响应+GPT-4对响应评分1~10分)训练RM模型(PPO优化),8*A100-80G+AMP+DP=3小时

AIGC之GPT-4:GPT-4的简介(核心原理/意义/亮点/技术点/缺点/使用建议)、使用方法、案例应用(计算能力/代码能力/看图能力等)之详细攻略

AIGC之GPT-4:GPT-4的简介(核心原理/意义/亮点/技术点/缺点/使用建议)、使用方法、案例应用(计算能力/代码能力/看图能力等)之详细攻略_一个处女座的程序猿的博客-程序员宅基地

| GPT-4-LLM (7B) (Peng et al., 2023) is a language model trained by fine-tuning LLaMA (7B) (Touvron et al., 2023a) on the GPT-4 (OpenAI, 2023) generated instruction dataset. GPT-4-LLM is initialized with LLaMA, then fine-tuned in the following two steps: (1) supervised fine- tuning on the constructed instruction dataset. The authors used the instructions from Alpaca (Taori et al., 2023), and then collected responses using GPT-4. LLaMA is fine-tuned on the GPT-4 generated dataset. The fine-tuning process takes approximately three hours on an 8*80GB A100 machine with mixed precision and fully shared data parallelism. (2) optimizing the step-1 model using the proximal policy optimization (PPO) (Schulman et al., 2017) method, the authors first built a comparison dataset by collecting responses from GPT-4, InstructGPT (Ouyang et al., 2022), and OPT-IML (Iyer et al., 2022) to a collection of instructions and then asked GPT-4 to rate each response from 1 to 10. Using the ratings, a reward model is trained based on OPT (Zhang et al., 2022a). The fine-tuned model from Step 1 is optimized by using the reward model to compute the policy gradient. For evaluations, GPT-4-LLM (7B) outperforms not only the baseline model Alpaca (7B), but also larger models including Alpaca (13B) and LLAMA (13B). For automated evaluation, GPT- 4-LLM (7B) outperforms Alpaca by 0.2, 0.5, and 0.7 on User-Oriented-Instructions-252 (Wang et al., 2022c), Vicuna-Instructions (Chiang et al., 2023), and Unnatural Instructions (Honovich et al., 2022) datasets, respectively. For human evaluation, regarding aspects including helpfulness, honesty, and harmlessness, GPT-4-LLM outperforms Alpaca by 11.7, 20.9, and 28.6 respectively. |

GPT-4-LLM(7B)(Peng等,2023)是一种语言模型,通过对GPT-4(OpenAI,2023)生成的指令数据集进行微调,使用LLaMA(7B)(Touvron等,2023a)完成微调。 GPT-4-LLM首先使用LLaMA进行初始化,然后在以下两个步骤中进行微调: (1)在构建的指令数据集上进行监督微调。作者使用了Alpaca的指令,然后使用GPT-4生成了响应。LLaMA在由GPT-4生成的数据集上进行微调。微调过程在8个80GB A100设备上使用混合精度和完全共享的数据并行技术,大约耗时三小时。 (2)使用近端策略优化(PPO) (Schulman et al., 2017)方法优化step-1模型,作者首先通过收集GPT-4、InstructGPT (Ouyang et al., 2022)和OPT-IML (Iyer et al., 2022)对指令集合的响应构建比较数据集,然后要求GPT-4对每个响应进行1到10的评分。使用评级,基于OPT训练奖励模型(Zhang et al., 2022a)。通过使用奖励模型来计算策略梯度,对步骤1的微调模型进行优化。 在评估方面,GPT-4-LLM(7B)不仅优于基准模型Alpaca(7B),还优于更大的模型,包括Alpaca(13B)和LLAMA(13B)。在自动评估方面,GPT-4-LLM(7B)在用户导向的指令-252(Wang等,2022c)、Vicuna-指令(Chiang等,2023)和非自然指令(Honovich等,2022)数据集上分别优于Alpaca 0.2、0.5和0.7。在人类评估方面,关于可帮助性、诚实性和无害性等四个不同方面,GPT-4-LLM分别优于Alpaca 11.7、20.9和28.6。 |

4.7、Claude:基于数据集(52K指令和GPT-4生成的响应配对)进行SFT→基于构建比较数据集(收集GPT-3等多个大模型的指令响应+GPT-4对响应评分)训练RM模型(PPO优化),8*A100-80G+AMP+DP=8小时

| Claude is a language model trained by fine-tuning the pre-trained language model on an instruction dataset, aiming to generate helpful and harmless responses. The fine-tuning process consists of two stages: (1) supervised fine-tuning on the instruction dataset. The authors created an instruction dataset by collecting 52K different instructions, paired with responses generated by GPT-4. The fine- tuning process takes approximately eight hours on an 8-card 80GB A100 machine with mixed precision and fully shared data parallelism. (2) optimizing the step-1 model with the proximal policy optimization (Schulman et al., 2017) method. The authors first built a comparison dataset by collecting responses from multiple large language models (e.g., GPT-3 (Brown et al., 2020b)) to the given collection of instructions and then asking GPT-4 (OpenAI, 2023) to rate each response. Using the ratings, a reward model is trained. Then, the fine-tuned model from Step 1 is optimized using the reward model with the proximal policy optimization method. Claude generates more helpful and harmless responses compared to the backbone model. For automatic evaluations, Claude outperforms GPT- 3 by 7% on the RealToxicityPrompts (Gehman et al., 2020) in terms of toxicity. For human evaluations, regarding four different aspects, including following correct instructions, following explicit constraints, fewer hallucinations, and generating appropriate responses, Claude outperforms GPT-3 (Brown et al., 2020b) +10%,+20%, -20%, and +10%. respectively. |

Claude是一种语言模型,通过对预训练语言模型在指令数据集上进行微调,旨在生成有帮助且无害的响应。微调过程包括两个阶段: (1)在指令数据集上进行监督微调。作者通过收集了52K个不同的指令,并与GPT-4生成的响应配对,创建了一个指令数据集。微调过程在8卡80GB A100设备上使用混合精度和完全共享的数据并行技术,大约耗时八小时。 (2)使用近端策略优化(Schulman等,2017)方法优化步骤1中的模型。作者首先通过收集多个大型语言模型(如GPT-3(Brown等,2020b))对给定指令的响应,并要求GPT-4对每个响应进行评分,来构建比较数据集。使用这些评分,训练了一个奖励模型。然后,使用奖励模型使用近端策略优化方法优化步骤1中的微调模型。 与骨干模型相比,Claude生成的响应更有帮助且无害。在自动评估方面,Claude在RealToxicityPrompts(Gehman等,2020)方面优于GPT-3 7%。在人类评估方面,关于遵循正确指令、遵循明确约束、幻觉较少以及生成适当响应等四个不同方面,Claude分别优于GPT-3 +10%、+20%、-20%和+10%。 |

4.8、WizardLM:基于LLaMA模型+Evol-Instruct指令数据集(ChatGPT生成)微调,8*V100 GPU+Deepspeed Zero-3技术+3个epochs =70小时

| WizardLM (7B) (Xu et al., 2023a) is a language model trained by fine-tuning LLaMA (7B) (Touvron et al., 2023a) on the instruction dataset Evol-Instruct generated by ChatGPT (details see Section 3.7). It is fine-tuned on a subset (with 70K) of Evol-Instruct to enable a fair comparison with Vicuna (Chiang et al., 2023). The fine-tuning process takes approximately 70 hours on 3 epochs based on an 8 V100 GPU with the Deepspeed Zero-3 (Rasley et al., 2020) technique. During inference, the max generation length is 2048. To evaluate LLMs’ performances on complex instructions, the authors collected 218 human- generated instructions from real scenarios (e.g., open-source projects, platforms, and forums), called Evol-Instruct testset. Evaluations are conducted on the Evol-Instruct testset and Vicuna’s testset. For human evaluation, WizardLM outperforms Alpaca (7B) (Taori et al., 2023) and Vicuna (7B) by a large margins, and generates equal or better responses on 67% test samples compared to ChatGPT. Automatic evaluation is conducted by asking GPT-4 to rate LLMs’ reponses. Specifically, WizardLM gains performance boosts compared to Alpaca by +6.2%, +5.3% on the Evol-Instruct testset and Vicuna’s test sets. WizardLM achieves outperforms Vicuna by+5.8 on the Evol-Instruct testset and +1.7% on the Vicuna’s test set. |

WizardLM(7B)(Xu等,2023a)是一种语言模型,通过对由ChatGPT生成的Evol-Instruct指令数据集进行微调,使用LLaMA(7B)(Touvron等,2023a)完成微调(详见第3.7节)。它在Evol-Instruct的一个子集(含70K)上进行微调,以便与Vicuna(Chiang等,2023)进行公平比较。微调过程基于8个V100 GPU和Deepspeed Zero-3(Rasley等,2020)技术,在3个epochs 内耗时约70小时。推理过程中,最大生成长度为2048。 为了评估LLM在复杂指令上的性能,作者从实际情境(例如开源项目、平台和论坛)中收集了218个人工生成的指令,称为Evol-Instruct测试集。评估在Evol-Instruct测试集和Vicuna的测试集上进行。在人类评估中,WizardLM在绝大多数情况下都优于Alpaca(7B)(Taori等,2023)和Vicuna(7B),并且与ChatGPT相比,在67%的测试样本上生成相等或更好的响应。自动评估通过要求GPT-4对LLM的响应进行评分进行,其中更高的得分意味着更好的性能。具体来说,在Evol-Instruct测试集和Vicuna的测试集上,WizardLM在比较上优于Alpaca +6.2%、+5.3%。WizardLM在Evol-Instruct测试集上优于Vicuna +5.8%,在Vicuna的测试集上优于Vicuna +1.7%。 |

4.9、ChatGLM2:基于GLM模型+中英文指令(1:1)的双语数据集(1.4T的tokens),类似InstructGPT的三步微调策略+上下文长度扩展到32K+MQA/CM策略(降GPU成本)+需13GB的显存(INT4量化后需6GB)

LLMs之ChatGLM2:ChatGLM2-6B的简介、安装、使用方法之详细攻略

LLMs之ChatGLM2:ChatGLM2-6B的简介、安装、使用方法之详细攻略_一个处女座的程序猿的博客-程序员宅基地

| ChatGLM2 (6B) (Du et al., 2022) is a language model trained by fine-tuning GLM (6B) (Du et al., 2022) on a bilingual dataset that contains both English and Chinese instructions The bilingual instruction dataset contains 1.4T tokens, with a 1:1 ratio of Chinese to English. Instructions in the dataset are sampled from the question-answering and dialogue completion tasks. ChatGLM is initialized with GLM, then trained by the three-step fine-tuning strategy, which is akin to InstructGPT (Ouyang et al., 2022). To better model contextual information across multi-turn conversations, the authors expanded the maximum context length from 1024 to 32K. To reduce GPU memory cost in the fine-tuning stage, the authors employed multi-query attention and causal mask strategies. During inference, ChatGLM2 requires 13GB GPU memory with FP16 and supports conversations up to 8K in length with 6GB GPU memory using the INT4 model quantization technique. Evaluations are conducted on four English and Chinese benchmarks, including MMLU (English) (Hendrycks et al., 2020), C-Eval (Chinese) (Huang et al., 2023), GSM8K (Math) (Cobbe et al., 2021), and BBH (English) (Suzgun et al., 2022). ChatGLM2 (6B) outperforms GLM (6B) and the baseline model ChatGLM (6B) on all benchmarks. Specifically, ChatGLM2 outperforms GLM by+3.1 on MMLU, +5.0 on C-Eval, +8.6 on GSM8K,and +2.2 on BBH. ChatGLM2 achieves better performances than ChatGLM by +2.1, +1.2, +0.4,+0.8 on MMLU, C-Eval, GSM8K and BBH, respectively. |

ChatGLM2(6B)(Du等,2022)是一种语言模型,通过对包含英文和中文指令的双语数据集进行微调,使用GLM(6B)(Du等,2022)完成微调。双语指令数据集包含1.4T个标记,中英比例为1:1。数据集中的指令来自问答和对话完成任务。ChatGLM2初始化使用GLM,然后通过类似于InstructGPT(Ouyang等,2022)的三步微调策略进行训练。 为了更好地对多轮对话中的上下文信息进行建模,作者将最大上下文长度从1024扩展到32K。为了在微调阶段降低GPU内存成本,作者采用了多查询注意力MQA和因果掩码CM策略。在推理过程中,ChatGLM2需要13GB的GPU内存,使用FP16支持最大长度为8K的对话,使用INT4模型量化技术时只需要6GB的GPU内存。 评估在四个英文和中文基准数据集上进行,包括MMLU(英文)(Hendrycks等,2020)、C-Eval(中文)(Huang等,2023)、GSM8K(数学)(Cobbe等,2021)和BBH(英文)(Suzgun等,2022)。ChatGLM2(6B)在所有基准数据集上优于GLM(6B)和基准模型ChatGLM(6B)。具体来说,ChatGLM2在MMLU上优于GLM +3.1,在C-Eval上优于GLM +5.0,在GSM8K上优于GLM +8.6,在BBH上优于GLM +2.2。ChatGLM2在MMLU、C-Eval、GSM8K和BBH上的性能也优于ChatGLM +2.1、+1.2、+0.4、+0.8。 |

4.10、LIMA:基于LLaMA模型+基于表面对齐假设构建的指令数据集,提出了表面对齐假设并验证了其效果

| LIMA (65B) (Zhou et al., 2023) is a large language model trained by fine-tuning LLaMA (65B) (Touvron et al., 2023a) on an instruction dataset, which is constructed based on the proposed superficial alignment hypothesis. The superficial alignment hypothesis refers to the idea that the knowledge and capabilities of a model are almost acquired in the pre-training stage, while the alignment training (e.g., instruction fine-tuning) teaches models to generate responses under user-preferred formalizations. Based on the superficial alignment hypothesis, the authors claimed that large language models can generate user-satisfied responses by fine-tuning it on a small fraction of instruction data. Therefore, the authors built instruction train/valid/test sets to verify this hypothesis. Evaluations are conducted on the constructed test set. For human evaluations, LIMA outperforms InstructGPT and Alpaca by 17% and 19%, respectively. Additionally, LIMA achieves comparable results to BARD, Cladue, and GPT-4. For automatic evaluation, which is conducted by asking GPT-4 to rate responses and a higher rate score denotes better performance, LIMA outperforms InstructGPT and Alpaca by 20% and 36%, respectively, achieving comparable results to BARD, while underperforming Claude and GPT-4. Experimental results verify the proposed superficial alignment hypothesis. |

LIMA(65B)(Zhou等,2023)是一种大型语言模型,通过对基于所提出的表面对齐假设构建的指令数据集进行微调,使用LLaMA(65B)(Touvron等,2023a)完成微调。表面对齐假设指的是模型的知识和能力几乎在预训练阶段获得,而对齐训练(例如指令微调)则教导模型在用户首选的形式化下生成响应。基于这一表面对齐假设,作者声称可以通过在少量指令数据上进行微调来生成满足用户的响应。因此,作者构建了指令训练/验证/测试集来验证这一假设。 评估在构建的测试集上进行。在人类评估中,LIMA在有关方面优于InstructGPT和Alpaca分别达到17%和19%。此外,LIMA在自动评估方面,通过要求GPT-4对响应进行评分,得分越高表示性能越好,分别优于InstructGPT和Alpaca达到20%和36%,与BARD的性能相当,但不如Claude和GPT-4。实验结果验证了提出的表面对齐假设。 |

4.11、Others

OPT-IML:基于OPT模型+微调IML数据集

LLMs:《OPT: Open Pre-trained Transformer Language Models》翻译与解读

LLMs:《OPT: Open Pre-trained Transformer Language Models》翻译与解读_csv数据集下载_一个处女座的程序猿的博客-程序员宅基地

Dolly 2:基于Pythia模型+微调databricks-dolly-15k指令数据集

| OPT-IML (175B) (Iyer et al., 2022) is a large language model trained by fine-tuning the OPT (175B) (Zhang et al., 2022a) model on the constructed Instruction Meta-Learning (IML) dataset, which consists of over 1500 NLP tasks from 8 publicly available benchmarks such as PromptSource (Bach et al., 2022), FLAN (Longpre et al., 2023), and Super-NaturalInstructions (Wang et al., 2022d). After fine-tuning, OPT-IML outperforms OPT across all benchmarks. Dolly 2.0 (12B) (Conover et al., 2023a) is initialized with the pre-trained language model Pythia (12B) (Biderman et al., 2023), and fine- tuned on the instruction dataset databricks-dolly- 15k, which contains 7 categories of NLP tasks such as text classification and information extraction. After fine-tuning, Dolly 2.0 (12B) outperforms Pythia (12B) on the EleutherAI LLM Evaluation Harness benchmark (Gao et al., 2021) by a large margin, and achieves comparable performances to GPT-NEOX (20B) (Black et al., 2022), which has dolly-15k two times more parameters compared to Dolly 2.0 (12B). |

OPT-IML(175B)(Iyer等,2022)是一种大型语言模型,通过对构建的Instruction Meta-Learning(IML)数据集上的OPT(175B)(Zhang等,2022a)模型进行微调,该数据集包含来自8个公开可用基准数据集的1500多个NLP任务,如PromptSource(Bach等,2022)、FLAN(Longpre等,2023)和Super-NaturalInstructions(Wang等,2022d)。微调后,OPT-IML在所有基准数据集上优于OPT。 Dolly 2.0(12B)(Conover等,2023a)通过在databricks-dolly-15k指令数据集上进行微调,使用Pythia(12B)(Biderman等,2023)进行初始化,该数据集包含文本分类和信息提取等7类NLP任务。微调后,Dolly 2.0(12B)在EleutherAI LLM 评估套件基准(Gao等,2021)上远远优于Pythia(12B),并在性能上与拥有两倍参数的GPT-NEOX(20B)(Black等,2022)达到相当的性能。 |

Falcon-Instruct:基于Falcon模型+微调英语对话数据集(Baize数据集150M/1.5亿tokens+RefinedWeb数据集),降内存(Flash Attention+MQ)

LLMs之Data:《The RefinedWeb Dataset for Falcon LLM: Outperforming Curated Corpora with Web Data, and Web Data Only》翻译与解读

https://yunyaniu.blog.csdn.net/article/details/131137560

Guanaco:基于LLaMA+微调多语言对话数据集(源自包含52K英文指令数据对的Alpaca+534K的多轮对话的多语言)

LLMs之Guanaco:《QLoRA:Efficient Finetuning of Quantized LLMs》翻译与解读

LLMs之Guanaco:《QLoRA:Efficient Finetuning of Quantized LLMs》翻译与解读_一个处女座的程序猿的博客-程序员宅基地

| Falcon-Instruct (40B) (Almazrouei et al., 2023a) is a large language model trained by fine- tuning Falcon (40B) (Almazrouei et al., 2023b) on an English dialogue dataset, which contains 150 million tokens from the Baize dataset (Xu et al., 2023c), with an additional 5% of the data from the RefinedWeb dataset (Penedo et al., 2023). To reduce memory usage, the authors employed flash attention (Dao et al., 2022) and multi-query techniques. For evaluation, Falcon- Instruct (40B) achieved better performance on the Open LLM Leaderboard (Beeching et al., 2023) compared to the baseline model Falcon (40B), and outperforms the Guanaco (65B), which has more model parameters. Guanaco (7B) (JosephusCheung, 2021) is a multi-turn dialog language model trained by fine- tuning LLaMA (7B) (Touvron et al., 2023a) on the constructed multilingual dialogue dataset. The multilingual dialogue dataset comes from two sources: Alpaca (Taori et al., 2023), which contains 52K English instruction data pairs; and a multilingual (e.g., Simplified Chinese, Traditional Chinese, Japanese, German) dialogue data, which contains 534K+ multi-turn conversations. After fine-tuning, Guanaco is to generate role-specific responses and continuous responses on a given topic in multi-turn conversations. |

Falcon-Instruct (40B) (Almazrouei等人,2023a)是一个大型语言模型,它是通过对Falcon (40B) (Almazrouei等人,2023b)在英语对话数据集上进行微调训练而成的,该数据集包含来自Baize数据集(Xu等人,2023c)的1.5亿个令牌,以及来自RefinedWeb数据集(Penedo等人,2023)的额外5%的数据。为了减少内存使用,作者采用了Flash Attention (Dao et al., 2022)和多查询技术。在评估中,Falcon- Instruct (40B)在Open LLM排行榜(Beeching et al., 2023)上的表现优于基线模型Falcon (40B),优于模型参数更多的Guanaco (65B)。 Guanaco(7B)(JosephusCheung,2021)是一种多轮对话语言模型,通过在构建的多语言对话数据集上进行微调,使用LLaMA(7B)(Touvron等,2023a)进行初始化。多语言对话数据集来自两个来源:包含52K英文指令数据对的Alpaca(Taori等,2023);以及包含534K+多轮对话的多语言(例如简体中文、繁体中文、日语、德语)对话数据。微调后,Guanaco用于在多轮对话中生成针对角色的响应和给定主题的连续响应。 |

Minotaur:基于Starcoder Plus模型+微调WizardLM和GPTeacher-General-Instruc指令数据集

Nous-Herme:基于LLaMA模型+微调BiologyPhysicsChemistry子集的300K个指令

| Minotaur (15B) is a large language model trained by fine-tuning the Starcoder Plus (15B) (Li et al., 2023f) on open-source instruction datasets including WizardLM (Xu et al., 2023a) and GPTeacher-General-Instruct. For model inference, Minotaur supports a maximum context length of 18K tokens. Nous-Herme (13B) is a large language model trained by fine-tuning LLaMA (13B) (Touvron et al., 2023a) on an instruction dataset, which contains over 300k instructions, sampled from GPTeacher, CodeAlpaca (Chaudhary, 2023), GPT-4-LLM (Peng et al., 2023), Unnatural Instructions (Honovich et al., 2022), and BiologyPhysicsChemistry subsets in the Camel- AI (Li et al., 2023c). Responses are generated by GPT-4. For evaluations, Nous-Herme (13B) achieves comparable performances to GPT-3.5- turbo on multiple tasks like ARC challenge (Clark et al., 2018) and BoolQ (Clark et al., 2019). |

Minotaur(15B)是一种大型语言模型,通过在包括WizardLM(Xu等,2023a)和GPTeacher-General-Instruct在内的开源指令数据集上,微调Starcoder Plus(15B)(Li等,2023f)。在模型推理阶段,Minotaur支持最大上下文长度为18K标记。 Nous-Herme(13B)是一种大型语言模型,通过在基于GPTeacher、CodeAlpaca(Chaudhary,2023)、GPT-4-LLM(Peng等,2023)、Unnatural Instructions(Honovich等,2022)以及Camel-AI(Li等,2023c)中的BiologyPhysicsChemistry子集中,包含超过300K个指令的指令数据集上进行微调,使用LLaMA(13B)(Touvron等,2023a)进行初始化。评估结果显示,Nous-Herme(13B)在多个任务(如ARC挑战和BoolQ)上与GPT-3.5-turbo的性能相当。 |

TÜLU :基于OPT 模型+微调混合指令数据集

YuLan-Chat:基于LLaMA模型+微调双语数据集(25万个中英文指令对)

| TÜLU (6.7B) (Wang et al., 2023c) is a large language model trained by fine-tuning OPT (6.7B) (Zhang et al., 2022a) on a mixed instruction dataset, which contains FLAN V2 (Longpre et al., 2023), CoT (Wei et al., 2022), Dolly (Conover et al., 2023a), Open Assistant-1, GPT4-Alpaca, Code-Alpaca (Chaudhary, 2023), and ShareGPT. After fine-tuning, TÜLU (6.7B) reaches on average 83% of ChatGPT’s performance and 68% of GPT- 4’s performance. YuLan-Chat (13B) (YuLan-Chat-Team, 2023) is a language model trained by fine-tuning LLaMA (13B) (Touvron et al., 2023a) on a constructed bilingual dataset, which contains 250,000 Chinese- English instruction pairs. After fine-tuning, YuLan-Chat-13B achieves comparable results to the state-of-the-art open-source model ChatGLM (6B) (Du et al., 2022), and outperforms Vicuna (13B) (Chiang et al., 2023) on the English BBH3K (BBH3K is a subset of BBH benchmark (Srivastava et al., 2022)) dataset. |

TÜLU (6.7B) (Wang等人,2023c)是在混合指令数据集上通过对OPT (6.7B) (Zhang等人,2022a)进行微调而训练的大型语言模型,该数据集包含FLAN V2 (Longpre等人,2023)、CoT (Wei等人,2022)、Dolly (Conover等人,2023a)、Open Assistant-1、GPT4-Alpaca、Code-Alpaca (Chaudhary, 2023)和ShareGPT。经过微调,TÜLU (6.7B)平均达到ChatGPT的83%和GPT- 4的68%的性能。 YuLan-Chat (13B) (YuLan-Chat- team, 2023)是通过微调LLaMA (13B) (Touvron et al., 2023a)在包含25万个中英文指令对的构建双语数据集上训练的语言模型。经过微调,YuLan-Chat-13B在英语BBH3K (BBH3K是BBH基准(Srivastava et al., 2022)的一个子集)数据集上取得了与最先进的开源模型ChatGLM (6B) (Du等人,2022)相当的结果,并且优于Vicuna (13B) (Chiang等人,2023)。 |

MOSS:微调对话指令的双语对话语言模型

Airoboros:基于LLaMA+微调Self-instruct数据集

UltraLM:基于LLAMA+微调,

| MOSS (16B) is a bilingual dialogue language model, which aims to engage in multi-turn conversations and utilize various plugins, trained by fine-tuning on dialogue instructions. After fine- tuning, MOSS outperforms the backbone model and generates responses that better align with human preferences. Airoboros (13B) is a large language model trained by fine-tuning LLAMA (13B) (Touvron et al., 2023a) on the Self-instruct dataset (Wang et al., 2022c). After fine-tuning, Airoboros significantly outperforms LLAMA (13B) (Touvron et al., 2023a) on all benchmarks and achieves highly comparable results to models fine-tuned specifically for certain benchmarks. UltraLM (13B) (Ding et al., 2023a) is a large language model trained by fine-tuning LLAMA (13B) (Touvron et al., 2023a). For evaluation, UltraLM (13B) outperforms Dolly (12B) (Conover et al., 2023a) and achieves the winning rate up to 98%. Additionally, it surpasses the previous best open-source models (i.e., Vicuna (Chiang et al., 2023) and WizardLM (Xu et al., 2023a)) with winning rates of 9% and 28%, respectively. |

MOSS(16B)是一种双语对话语言模型,旨在进行多轮对话并利用各种插件,在对话指令上进行微调。微调后,MOSS优于基准模型,并生成与人类偏好更加一致的响应。 Airoboros(13B)通过在Self-instruct数据集上进行微调,使用LLaMA(13B)(Touvron等,2023a)进行初始化。微调后,Airoboros在所有基准数据集上明显优于LLAMA(13B),并且与专门针对某些基准测试进行微调的模型取得了高度可比性的结果。 UltraLM(13B)(Ding等,2023a)通过对LLAMA(13B)(Touvron等,2023a)进行微调获得,微调后在性能上优于Dolly(12B)(Conover等,2023a)并达到98%的胜率。此外,它在性能上超越了之前的最佳开源模型(即Vicuna和WizardLM),其胜率分别为9%和28%。 |

5、Multi-modality Instruction Fine-tuning多模态指令微调

5.1、Multi-modality Datasets多模态数据集

MUL-TIINSTRUCT—多模态指令微调数据集—OFA模型:由62个不同的多模态任务组成+统一的序列到序列格式

| MUL-TIINSTRUCT (Xu et al., 2022) is a multimodal instruction tuning dataset consisting of 62 diverse multimodal tasks in a unified seq- to-seq format. This dataset covers 10 broad categories and its tasks are derived from 21 existing open-sourced datasets. Each task is equipped with 5 expert-written instructions. For the existing tasks, the authors use the input/output pairs from their available open-source datasets to create instances. While for each new task, the authors create 5k to 5M instances by extracting the necessary information from instances of existing tasks or reformulating them. The MUL-TIINSTRUCT dataset has demonstrated its efficiency in enhancing various transfer learning technique. For example, fine-tuning the OFA model (930M) (Wang et al., 2022a) using various transfer learning strategies such as Mixed Instruction Tuning and Sequential Instruction Tuning on MUL-TIINSTRUCT improve the zero- shot performance across all unseen tasks. On commonsense VQA task, OFA fine-tuned on MUL- TIINSTRUCT achieves 50.60 on RougeL and 31.17 on accuracy, while original OFA achieves 14.97 on RougeL and 0.40 on accuracy. |

MUL-TIINSTRUCT(Xu等,2022)是一个多模态指令微调数据集,由62个不同的多模态任务组成,以统一的序列到序列格式呈现。该数据集涵盖10个广泛的类别,其任务来自21个现有的开源数据集。每个任务配备了5个专家编写的指令。 >> 对于现有任务,作者使用其可用的开源数据集中的输入/输出对创建实例。 >> 而对于每个新任务,作者通过从现有任务的实例中提取必要信息或重新构建它们来创建5k到5M个实例。 MUL-TIINSTRUCT数据集已经证明在增强各种迁移学习技术方面的有效性。例如,使用Mixed Instruction Tuning和Sequential Instruction Tuning等各种迁移学习策略对OFA模型(930M)(Wang等,2022a)在MUL-TIINSTRUCT上进行微调,改进了所有未见任务的零-shot性能。在常识视觉问答任务上,经过MUL-TIINSTRUCT微调的OFA在RougeL上达到50.60,在准确性上达到31.17,而原始OFA在RougeL上只有14.97,在准确性上只有0.40。 |

PMC-VQA—大规模的医学视觉问答数据集—MedVInT模型:227k个图像-问题对和149k个图像,从PMC-OA收集图像-标题对+ChatGPT生成问题-答案对+手工验证

| PMC-VQA (Zhang et al., 2023c) is a large- scale medical visual question-answering dataset that comprises 227k image-question pairs of 149k images, covering various modalities or diseases. The dataset can be used for both open-ended and multiple-choice tasks. The pipeline for generating the PMC-VQA dataset involves collecting image-caption pairs from the PMC-OA (Lin et al., 2023) dataset, using ChatGPT to generate question-answer pairs, and manually verifying a subset of the dataset for quality. The authors propose a generative-based model MedVInT for medical visual understanding by aligning visual information with a large language model. MedVInT pretrained on PMC- VQA achieves state-of-the-art performance and outperforms existing models on VQA-RAD (Lau et al., 2018) and SLAKE (Liu et al., 2021a) benchmarks, with 81.6% accuracy on VQA-RAD and 88.0% accuracy on SLAKE. |

PMC-VQA(Zhang等,2023c)是一个大规模的医学视觉问答数据集,包括227k个图像-问题对和149k个图像,涵盖了各种模态或疾病。该数据集可用于开放式和多项选择任务。生成PMC-VQA数据集的流程涉及从PMC-OA(Lin等,2023)数据集中收集图像-标题对,使用ChatGPT生成问题-答案对,并对数据集的子集进行手工验证以确保质量。作者提出了一种基于生成的模型MedVInT,通过将视觉信息与大型语言模型进行对齐,实现医学视觉理解。在经过PMC-VQA微调的MedVInT上实现了最新的性能,并在VQA-RAD(Lau等,2018)和SLAKE(Liu等,2021a)基准上优于现有模型,VQA-RAD上的准确率为81.6%,SLAKE上的准确率为88.0%。 |

LAMM—2D图像和3D点云理解:包含186K个语言-图像指令-响应对,以及10K个语言-点云指令-响应对

| LAMM (Yin et al., 2023) is a comprehensive multi-modal instruction tuning dataset for 2D image and 3D point cloud understanding. LAMM contains 186K language-image instruction- response pairs, and 10K language-point cloud instruction-response pairs. The authors collect images and point clouds from publicly available datasets and use the GPT-API and self-instruction methods to generate instructions and responses based on the original labels from these datasets. LAMM-Dataset includes data pairs for commonsense knowledge question answering by incorporating a hierarchical knowledge graph label system from the Bamboo (Zhang et al., 2022b) dataset and the corresponding Wikipedia description. The authors also propose the LAMM- Benchmark, which evaluates existing multi-modal language models (MLLM) on various computer vision tasks. It includes 9 common image tasks and 3 common point cloud tasks, and LAMM- Framework, a primary MLLM training framework that differentiates the encoder, projector, and LLM finetuning blocks for different modalities to avoid modality conflicts. |

LAMM(Yin等,2023)是一个全面的多模态指令微调数据集,用于2D图像和3D点云理解。LAMM包含186K个语言-图像指令-响应对,以及10K个语言-点云指令-响应对。作者从公开可用的数据集中收集图像和点云,并使用GPT-API和自我指导方法根据这些数据集的原始标签生成指令和响应。LAMM-Dataset还包括了常识知识问答的数据对,通过将分层知识图标签系统从Bamboo(Zhang等,2022b)数据集和相应的维基百科描述整合进来。作者还提出了LAMM-Benchmark,用于评估现有的多模态语言模型(MLLM)在各种计算机视觉任务上的性能。其中包括9个常见的图像任务和3个常见的点云任务,以及LAMM-Framework,一个主要的MLLM训练框架,用于为不同的模态区分编码器、投影器和LLM微调模块,以避免模态冲突。 |

5.2、Multi-modality Instruction Fine-tuning Models多模态指令微调模型

InstructPix2Pix条件扩散模型:基于Stable Diffusion+微调多模态数据集(综合两大模型能力【GPT-3、Stable Diffusion】来生成)

| InstructPix2Pix (983M) (Brooks et al., 2022) is a conditional diffusion model trained by fine-tuning Stable Diffusion (983M) (Rombach et al., 2022) on a constructed multi-modal dataset that contains more than 450K text editing instructions and corresponding images before and after the edit. The authors combine the abilities of two large-scale pre- trained models, a language model GPT-3 (Brown et al., 2020b) and a text-to-image model Stable Diffusion (Rombach et al., 2022), to generate the the training dataset. GPT-3 is fine-tuned to generate text edits based on image prompts, while Stable Diffusion is used to convert the generated text edits into actual image edits. InstructPix2Pix is then trained on this generated dataset using a latent diffusion objective. Figure 5 shows the process of generating image editing dataset and training the diffusion model on that dataset. The authors compares the proposed method qualitatively with previous works such as SDEdit (Meng et al., 2022) and Text2Live (Bar-Tal et al., 2022), highlighting the ability of the model to follow image editing instructions instead of descriptions of the image or edit layer. The authors also presents quantitative comparisons with SDEdit (Meng et al., 2022) using metrics measuring image consistency and edit quality. |

InstructPix2Pix(983M)(Brooks等,2022)是一种条件扩散模型,通过在构建的多模态数据集上对Stable Diffusion(983M)(Rombach等,2022)进行微调而训练得到,该数据集包含超过450K个文本编辑指令和相应的编辑前后图像。作者将两个大规模预训练模型的能力结合在一起,即语言模型GPT-3(Brown等,2020b)和文本到图像模型Stable Diffusion(Rombach等,2022),以生成训练数据集。GPT-3被微调以根据图像提示生成文本编辑,而Stable Diffusion则用于将生成的文本编辑转换为实际图像编辑。然后,InstructPix2Pix在此生成的数据集上使用潜在扩散目标进行训练。图5展示了生成图像编辑数据集的过程以及在该数据集上训练扩散模型的过程。 作者将所提出的方法与之前的作品(如SDEdit和Text2Live)进行了定性比较,强调该模型能够按照图像编辑指令进行操作,而不是图像或编辑层的描述。作者还使用衡量图像一致性和编辑质量的指标对其与SDEdit进行了定量比较。 |

LLaVA:基于CLIP视觉编码器和LLaMA语言解码器模型+微调158K个独特的语言-图像指令-跟随样本的教学视觉语言数据集(利用GPT-4转换格式)

| LLaVA (13B) (Liu et al., 2023b) is a large multimodal model developed by connecting the visual encoder of CLIP (400M) (Radford et al., 2021) with the language decoder LLaMA (7B) (Touvron et al., 2023a). LLaVA is fine-tuned using the generated instructional vision-language dataset consisted of 158K unique language-image instruction-following samples. The data collection process involved creating conversation, detailed description, and complex reasoning prompts. GPT-4 is used to convert image-text pairs into appropriate instruction-following format for this dataset. Visual features such as captions and bounding boxes were used to encode images. LLaVA yields a 85.1% relative score compared with GPT-4 on a synthetic multimodal instruction following dataset. When fine-tuned on Science QA, the synergy of LLaVA and GPT-4 achieves a new state-of-the-art accuracy of 92.53%. |

LLaVA(13B)(Liu等,2023b)是一个大型多模态模型,通过将CLIP(400M)(Radford等,2021)的视觉编码器与LLaMA(7B)(Touvron等,2023a)的语言解码器相连接而开发。LLaVA通过生成包含158K个独特的语言-图像指令-跟随样本的教学视觉语言数据集进行微调。 数据收集过程涉及创建会话、详细描述和复杂推理提示。使用GPT-4将图像-文本对转换为适用于此数据集的适当的指令跟随格式。使用标题和边界框等视觉特征来编码图像。LLaVA在合成多模态指令跟随数据集上相对于GPT-4的得分为85.1%。在Science QA上进行微调时,LLaVA和GPT-4的协同作用实现了92.53%的新的最高准确率。 |

Video-LLaMA多模态框架:由两个分支编码器组成(视觉-语言VL分支和音频-语言AL分支+语言解码器LLaMA)

| Video-LLaMA (Zhang et al., 2023b) is a multimodal framework that enhances large language models with the ability to understand both visual and auditory content in videos. The architecture of Video-LLaMA consists of two branche encoders: the Vision-Language (VL) Branch and the Audio-Language (AL) Branch, and a language decoder (Vicuna (7B/13B) (Chiang et al., 2023), LLaMA (7B) (Touvron et al., 2023a), etc.). The VL Branch includes a frozen pre-trained image encoder (pre-trained vision component of BLIP-2 (Li et al., 2023d), which includes a ViT-G/14 and a pre-trained Q-former), a position embedding layer, a video Q-former and a linear layer. The AL Branch includes a pre- trained audio encoder (ImageBind (Girdhar et al., 2023)) and an Audio Q-former. Figure 6 shows the overall architecture of Video-LLaMA with Vision-Language Branch and Audio-Language Branch. The VL Branch is trained on the Webvid-2M (Bain et al., 2021) video caption dataset with a video-to-text generation task, and fine-tuned on the instruction-tuning data from MiniGPT-4 (Zhu et al., 2023), LLaVA (Liu et al., 2023b) and VideoChat (Li et al., 2023e). The AL Branch is trained on video/image instru- caption data to connect the output of ImageBind to language decoder. After finetuning, Video- LLaMA can perceive and comprehend video content, demonstrating its ability to integrate auditory and visual information, understand static images, recognize common-knowledge concepts, and capture temporal dynamics in videos. |

Video-LLaMA(Zhang等,2023b)是一个多模态框架,通过在视频中理解视觉和听觉内容来增强大型语言模型的能力。Video-LLaMA的架构由两个分支编码器组成:视觉-语言(VL)分支和音频-语言(AL)分支,以及一个语言解码器(Vicuna(7B/13B)(Chiang等,2023),LLaMA(7B)(Touvron等,2023a)等)。 VL分支包括一个冻结的预训练图像编码器(BLIP-2的预训练视觉组件(Li等,2023d)),其中包括一个ViT-G/14和一个预训练的Q-former)、一个位置嵌入层、一个视频Q-former和一个线性层。 AL分支包括一个预训练的音频编码器(ImageBind(Girdhar等,2023))和一个音频Q-former。图6展示了Video-LLaMA的整体架构,包括视觉-语言分支和音频-语言分支。 VL分支在Webvid-2M(Bain等,2021)视频字幕数据集上进行训练,进行视频到文本生成任务,并在来自MiniGPT-4(Zhu等,2023)、LLaVA(Liu等,2023b)和VideoChat(Li等,2023e)的指令微调数据上进行微调。 AL分支在视频/图像指令-字幕数据上进行训练,将ImageBind的输出连接到语言解码器。 微调后,Video-LLaMA能够感知和理解视频内容,展示了其整合听觉和视觉信息、理解静态图像、识别常识概念以及捕捉视频中的时间动态的能力。 |

InstructBLIP视觉-语言指令微调框架:基于BLIP-2模型(图像编码器+LLM+Query Transformer)

| InstructBLIP (1.2B) (Dai et al., 2023) is a vision-language instruction tuning framework initialized with a pre-trained BLIP-2 (Li et al., 2023d)) model consisting of an image encoder, an LLM (FlanT5 (3B/11B) (Chung et al., 2022) or Vicuna (7B/13B) (Chiang et al., 2023)), and a Query Transformer (Q-Former) to bridge the two. As shown in Figure 7, the Q-Former extracts instruction-aware visual features from the output embeddings of the frozen image encoder, and feeds the visual features as soft prompt input to the frozen LLM. The authors evaluate the proposed InstructBLIP model on a variety of vision- language tasks, including image classification, image captioning, image question answering, and visual reasoning. They use 26 publicly available datasets, dividing them into 13 held-in and 13 held-out datasets for training and evaluation. The authors demonstrate that InstructBLIP achieves state-of-the-art zero-shot performance on a wide range of vision-language tasks. InstructBLIP yields an average relative improvement of 15.0% when compared to BLIP-2, smallest InstructBLIP (4B) outperforms Flamingo (80B) (Alayrac et al., 2022) on all six shared evaluation datasets with an average relative improvement of 24.8%. |

InstructBLIP(1.2B)(Dai等,2023)是一个视觉-语言指令微调框架,其初始化为一个预训练的BLIP-2(Li等,2023d)模型,包括图像编码器、LLM(FlanT5(3B/11B)(Chung等,2022)或Vicuna(7B/13B)(Chiang等,2023))和一个Query Transformer(Q-Former)以连接两者。如图7所示,Q-Former从冻结的图像编码器的输出嵌入中提取指令感知的视觉特征,并将视觉特征作为软提示输入到冻结的LLM中。 作者在各种视觉-语言任务上评估了所提出的InstructBLIP模型,包括图像分类、图像字幕生成、图像问答和视觉推理。他们使用了26个公开可用的数据集,将其分为13个用于训练和13个用于评估的数据集。作者证明InstructBLIP在各种视觉-语言任务上实现了最新的零-shot性能。相较于BLIP-2,InstructBLIP平均相对改进15.0%,最小的InstructBLIP(4B)在六个共享评估数据集上优于Flamingo(80B)(Alayrac等,2022),平均相对改进为24.8%。 |

Otter:基于OpenFlamingo模型+只微调Perceiver重采样模块、交叉注意力层和输入/输出嵌入

| Otter (Li et al., 2023b) is a multi-modal model trained by fine-tuning OpenFlamingo (9B) (Awadalla et al., 2023), with the language and vision encoders frozen and only fine-tuning the Perceiver resampler module, cross-attention layers, and input/output embeddings. The authors organize diverse multi-modal tasks covering 11 categories and build multi-modal in-context instruction tuning datasets MIMIC-IT of 2.8M multimodal instruction-response pairs, which consists of image- instruction-answer triplets, where the instruction- answer is tailored to the image. Each data sample also includes context, which contains a series of image-instruction-answer triplets that contextually correlate with the queried triplet. Otter demonstrates the ability to follow user instructions more accurately and provide more detailed descriptions of images compared to OpenFlamingo (Awadalla et al., 2023). |